We recently merged a new input subsystem into the Crazyflie Python client. It allows more flexible input configurations, such as connecting more than one gamepad in training mode, or having external inputs like the LeapMotion appear as a standard input device. To test the flexibility of the new input system, we implemented a ØMQ input driver on Friday. ØMQ is a library that makes it easy to pass messages between programs. It is fast, has low latency and is extremely easy to use. It has bindings and implementations for many programming languages, which opens up Crazyflie control to many more people and applications.

The way it currently works: you connect the graphical Crazyflie client and select ZMQ as the input device. You then connect to the ØMQ socket and send it JSON containing pitch/roll/yaw/thrust. And voilà, you are controlling the Crazyflie from your own program.
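As a rough illustration of the sending side, here is a minimal Python sketch. The JSON field layout, the PUSH socket type and the endpoint address are all assumptions made for the example, not the client's confirmed protocol, so check the ZMQ input driver in the client source for what it actually expects. The helper names `build_setpoint` and `open_sender` are hypothetical.

```python
import json


def build_setpoint(roll, pitch, yaw, thrust):
    """Serialize one control setpoint as a JSON string.

    The flat layout and field names are assumptions for illustration;
    the real input driver may expect a different schema.
    """
    return json.dumps({
        "roll": float(roll),
        "pitch": float(pitch),
        "yaw": float(yaw),
        "thrust": float(thrust),
    })


def open_sender(endpoint):
    """Open a ØMQ socket towards the client (requires pyzmq).

    The PUSH socket type is an assumption; match it to whatever
    socket type the client side binds.
    """
    import zmq  # imported lazily so build_setpoint works without pyzmq

    socket = zmq.Context.instance().socket(zmq.PUSH)
    socket.connect(endpoint)
    return socket


# Usage (endpoint is a placeholder, not the client's documented address):
#   sender = open_sender("tcp://127.0.0.1:5555")
#   sender.send_string(build_setpoint(0.0, 5.0, 0.0, 30000))
```

Keeping the message builder separate from the socket code makes it easy to adapt the schema without touching the transport.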

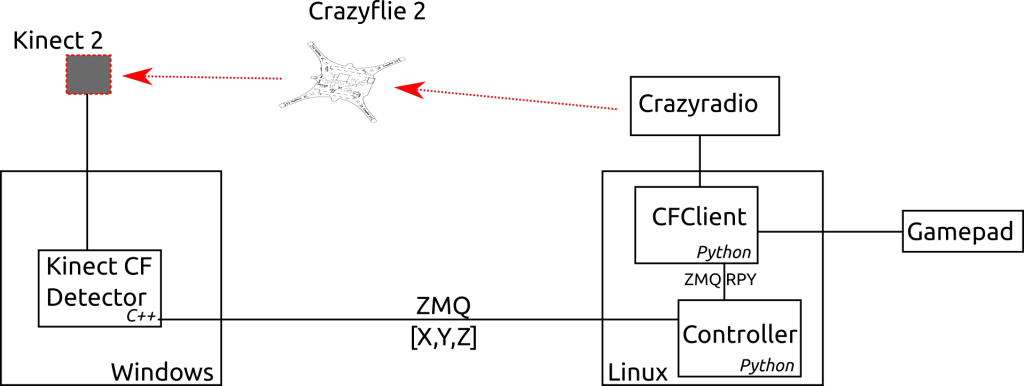

We just got a Kinect 2 and started playing around with it, and suddenly the new ØMQ control became very useful. We worked with the Kinect a long time ago, so we had control code from back then. We also wanted a quick proof of concept, so we started with the Microsoft SDK to see what is possible; we are going to use libfreenect2 on Linux later. Finally, we knew how hard it was to control the thrust, so we wanted a way to test pitch/roll first. At the end of the day we ended up with this architecture in the office:

The C++ implementation in Visual Studio detects the Crazyflie and sends its coordinates to the controller Python script. The controller script is a stripped-down version of our previous Kinect experiment and only runs PID control loops for pitch and roll, trying to keep the Crazyflie at a fixed coordinate in space. Finally, the Crazyflie client is set up in training mode, with ZMQ handling pitch and roll and the gamepad handling thrust and yaw. The gamepad can take over completely in case of problems.
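To give an idea of what the controller script does, here is a minimal PID sketch in Python. It is not our actual controller: the class, the gains, the output limit and the mapping from position axes to roll/pitch are all assumptions chosen for illustration.

```python
class PID:
    """Basic PID loop with a clamped output (illustrative only)."""

    def __init__(self, kp, ki, kd, output_limit):
        self.kp = kp
        self.ki = ki
        self.kd = kd
        self.output_limit = output_limit
        self.integral = 0.0
        self.prev_error = None

    def update(self, target, measured, dt):
        """Return the control output for one time step of length dt."""
        error = target - measured
        self.integral += error * dt
        # No derivative term on the first sample, since there is no history yet.
        if self.prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.prev_error) / dt
        self.prev_error = error
        output = (self.kp * error
                  + self.ki * self.integral
                  + self.kd * derivative)
        # Clamp so a large position error never commands an extreme angle.
        return max(-self.output_limit, min(self.output_limit, output))


def position_to_angles(pid_x, pid_y, target_xy, measured_xy, dt):
    """Map a 2D position error to (roll, pitch) angles.

    Which camera axis drives roll and which drives pitch, and with
    which sign, depends on the setup; this mapping is an assumption.
    """
    roll = pid_x.update(target_xy[0], measured_xy[0], dt)
    pitch = pid_y.update(target_xy[1], measured_xy[1], dt)
    return roll, pitch
```

One `PID` instance per axis keeps the integral and derivative state separate, and the clamp is a cheap safety net while tuning.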

Thanks to ZMQ's resilience we can stop any part of the system and start it again, and the connections automagically reappear. So we ended up with the following workflow:

- Fly in the Kinect detection area

- Hand over pitch/roll to the controller loop

- When the Crazyflie starts oscillating or going away, take over control and land

- Stop controller, modify things, restart

This was quite painless and nice. We ended up connecting very different systems together and they just worked. We got so interested in the experiment that we now have a full ZMQ Crazyflie server in the works that would allow other programs to do what is possible with the standard Crazyflie API: scan for Crazyflies, connect, read and write logs and params.

Is there any documentation on how to set up training mode? I’m trying to do a similar thing with the Kinect & Crazyflie 2.0, would be very interested to see how you guys set up the control sharing between ZMQ and the controller.

I’ve put together some basic documentation on the wiki:

http://wiki.bitcraze.se/doc:crazyflie:client:pycfclient:index#input_mux

Currently there are some issues with this, though, so it’s switched off by default and has to be enabled (see the link).

Basically you switch it on, select the “TakeOverSelective” mux, then first select the gamepad (de-select it if it is already selected) and then select ZMQ. This results in roll/pitch being controlled by ZMQ and the rest by the gamepad. If you press Alt1 on the gamepad, you take over control of roll/pitch from ZMQ.

A tip: don’t try to set the mapping for the gamepad after the mux is activated. Make sure that you have already done this and that the “preferred” mapping is already set for the gamepad (this is where some of the bugs are…)

If you want some other mixing or other features, the code is in this file:

https://github.com/bitcraze/crazyflie-clients-python/blob/develop/lib/cfclient/utils/mux/takeoverselectivemux.py

If you want to change which axes are taken from which device, or the take-over “trigger” button, this can be done directly in the code for now.
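To show the idea behind the mixing, here is a small standalone Python sketch. It is not the code from `takeoverselectivemux.py`: the function name, the dictionary-based inputs and the treatment of the take-over button as a held state are all assumptions for illustration.

```python
def mix_inputs(gamepad, zmq_input, takeover_pressed,
               zmq_axes=("roll", "pitch")):
    """Combine two input sources into one setpoint.

    gamepad and zmq_input are dicts of axis name -> value.
    Axes listed in zmq_axes come from ZMQ unless the take-over
    button is pressed, in which case the gamepad controls everything.
    """
    mixed = dict(gamepad)  # start from the gamepad values
    if not takeover_pressed:
        for axis in zmq_axes:
            if axis in zmq_input:
                mixed[axis] = zmq_input[axis]
    return mixed
```

Changing which device drives which axis then comes down to editing `zmq_axes`, which is roughly the kind of change you would make in the real mux code.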