I’ve spent the last 5 years of my career at Microsoft on the team responsible for HoloLens and Windows Mixed Reality VR headsets. Typically, augmented reality applications deal with creating and manipulating digital content in the context of real-world surroundings. I thought it’d be interesting to explore some applications of using an augmented reality device to manipulate and control physical objects and have them interact with the real world and/or digital content.

Phase 1: Gesture Input

The HoloLens SDK has APIs for consuming hand gestures as input. For the first phase of this project, I modified the existing Windows UAP/UWP client to handle these gestures and convert them to CRTP setpoints. I used the “manipulation gesture” which provides offsets in three dimensions for a tap-and-drag gesture, from the point in space where the initial tap occurred. These three degrees of freedom are mapped to thrust, pitch and roll.

For the curious, there’s an article on my website with details about the implementation and source code. Here’s a YouTube video where I explain the concept and show a couple of quick demos.

As you can see in the first demo in the video, this works but isn’t entirely useful or practical. The HoloLens accounts for head movements (otherwise moving the head to the left would produce the same offset as moving the hand to the right, requiring the user to keep his or her head very still) but the user must still take care to keep the hand in the field of view of the device’s cameras. Once the gesture is released (or the hand goes out of view) the failsafe engages and the Crazyflie drops to the ground. And of course, lack of yaw control cripples the ability to control the Crazyflie.

Phase 2: Position Hold

Adding a flow deck makes for a more compelling user experience, as seen in the second demo in the video above. The Crazyflie uses the sensors on the flow deck to hold its position. With this functionality, the user is free to move about the room and make shorter “adjustment” hand gestures, instead of needing to hold very still. In this mode, the gesture’s degrees of freedom map to an x/y velocity and a vertical offset from the current z-depth.

This is a step in the right direction, but still has limitations. The HoloLens doesn’t know where it is in space relative to the Crazyflie. A gesture in the y axis relative to the device will always result in a movement in the y direction of the Crazyflie, which begins to feel unnatural if the user moves around. Ideally, gestures would cause the crazyflie to move in the same direction relative to the user, not relative to the ‘front’ of the Crazyflie. Also, there’s still no control over yaw.

The flow deck has some limitation as well: The z-range only goes to 2 meters with any accuracy. The flow sensor (for lateral stabilization) has a strong dependency on the patterns on the floor below. A flow sensor is a camera that relies on measuring pixel deltas from frame to frame, so if the floor is blank or has a repeating pattern, it can be difficult to hold position properly.

Despite these limitations, using hand gestures to control the Crazyflie with a flow deck installed as actually quite fun and surprisingly easy.

Phase 3 and Beyond: Future Work & Ideas

I’m currently working on some new features that I hope will open the door for more interesting applications. All of what follows is a work in progress, and not yet implemented or functional. Dream with me!

Shared Coordinate System

The next phase (currently a work in progress) is to get the HoloLens and the Crazyflie into a shared coordinate system. Having spatial awareness between the HoloLens and the Crazyflie opens up some very exciting scenarios:

- The orientation problem could be improved: transforms could be applied to gestures to cause the Crazyflie to respond to commands in the user’s frame of reference (so ‘pushing’ away from one’s self would cause the Crazyflie to fly away from the user, instead of whatever direction is ‘forward’ to the Crazyflie’s perspective).

- A ‘follow me’ mode, where the crazyflie autonomously follows behind a user as he or she moves throughout the space.

- Ability to walk around and manually set waypoints by selecting points of interest in the environment.

The Loco Positioning System is a natural fit here. A setup step (where a spatial anchor or similar is established at same physical position and orientation as the LPS origin) and a simple transform for scale and orientation (HoloLens and the Crazyflie define X,Y,Z differently) would allow the HoloLens and Crazyflie to operate in a shared coordinate system. One could also use the webcam on the HoloLens along with computer vision techniques to track the Crazyflie, but that would require constant line of sight from the HoloLens to the Crazyflie.

Obstacle Detection/Avoidance

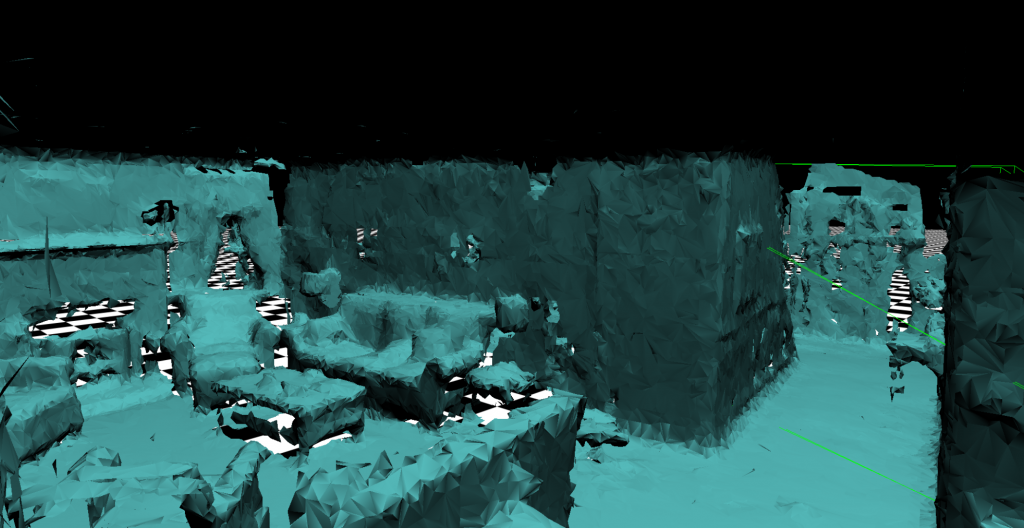

The next step after establishing a shared coordinate system is to use the HoloLens for obstacle detection and avoidance. The HoloLens has the ability to map surfaces in real time and position itself in that map (SLAM). Logic could be added to the HoloLens to consume this surface map and adjust pathing/setpoints to avoid these obstacles without reducing the overall compute/power budget of the Crazyflie itself.

Swarm Control and Manipulation

As a simple extension of the shared coordinate system (and what Bitcraze has been doing with TDoA and swarming lately) the HoloLens could be used to manipulate individual Crazyflies within a swarm through raycasting (the same technique used to gaze at, select and move specific holograms in the digital domain). Or perhaps a swarm could be controlled to move out of the way as a user passes through the swarm, and return to formation afterward.

Augmenting with Digital Content

All scenarios discussed thus far have dealt with using the HoloLens as an input and localization device, but its primary job is to project digital content into the real world. I can think of applications such as:

- Games

- Flying around through a digital obstacle course

- First person shooter or space invaders type game (Crazyflie moves around to avoid user or fire rendered laser pulses at user, etc)

- Diagnostic/development tools

- Overlaying some diagnostic information (such as battery life) above the Crazyflie (or each Crazyflie in a swarm)

- Set or visualize/verify the position of the LPS nodes in space

- Visualize the position of the Crazyflie as reported by LPS, to observe error or drift in real time

Conclusion

There’s no shortage of interesting applications related to blending augmented reality with the Crazyflie, but there’s quite a bit of work ahead to get there. Keep an eye on the Bitcraze blog or the forums for updates and news on this effort.

I’d love to hear what ideas you have for combining augmented reality devices with physical devices like the Crazyflie. Leave a comment with thoughts, suggestions, or any other relevant work!