Today’s blog post comes from Joseph La Delfa, who is currently doing his Industrial Post-Doc with Bitcraze.

The Qi deck and the Brushless charging dock allow you to start charging a Crazyflie quickly, without having to fiddle with a plug or a battery change. But when you need to charge 10 or more Crazyflie 2.x drones and don’t want the weight penalty of the Qi deck, some other solutions are needed.

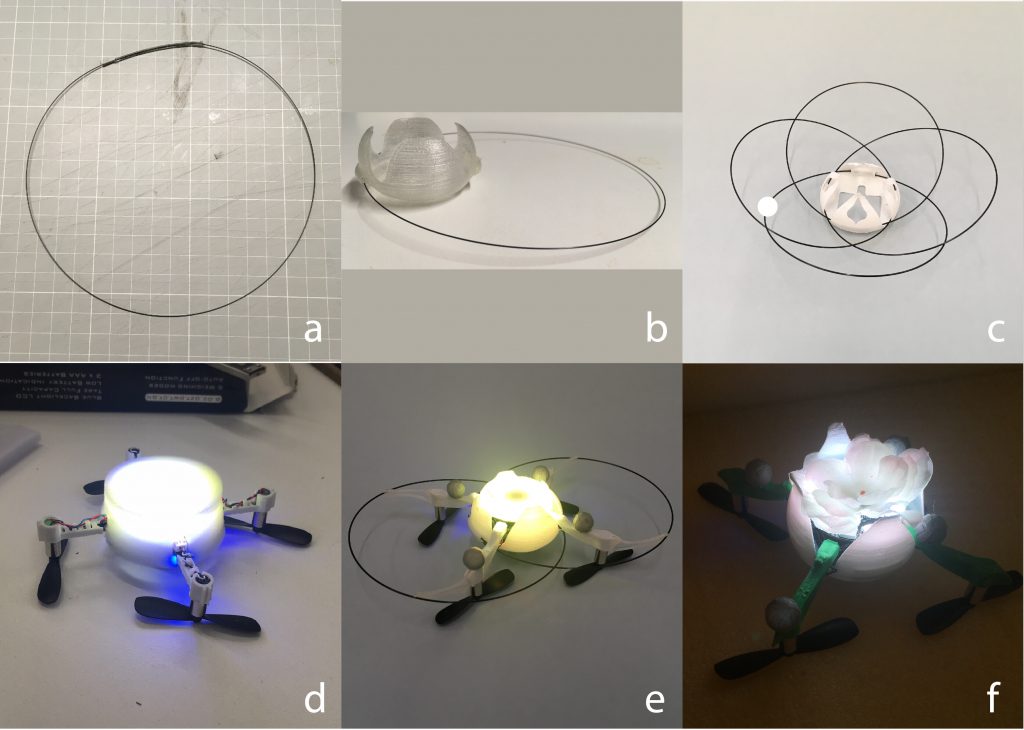

This blog post is about a couple of chargers I made for the Crazyflie 2.x for my research prototypes. I research interaction design, which often means building something new, putting it in the hands of a user, and getting them to try it out. What is important in these scenarios is that when there is unexpected behavior, they don’t think that the prototype is bugging out or broken. One way to prevent this is to build things to a higher quality, which raises the expectations of the user. This can help them stay immersed in the interaction and not look over to me when there is unexpected behavior and say… “is this working properly?”

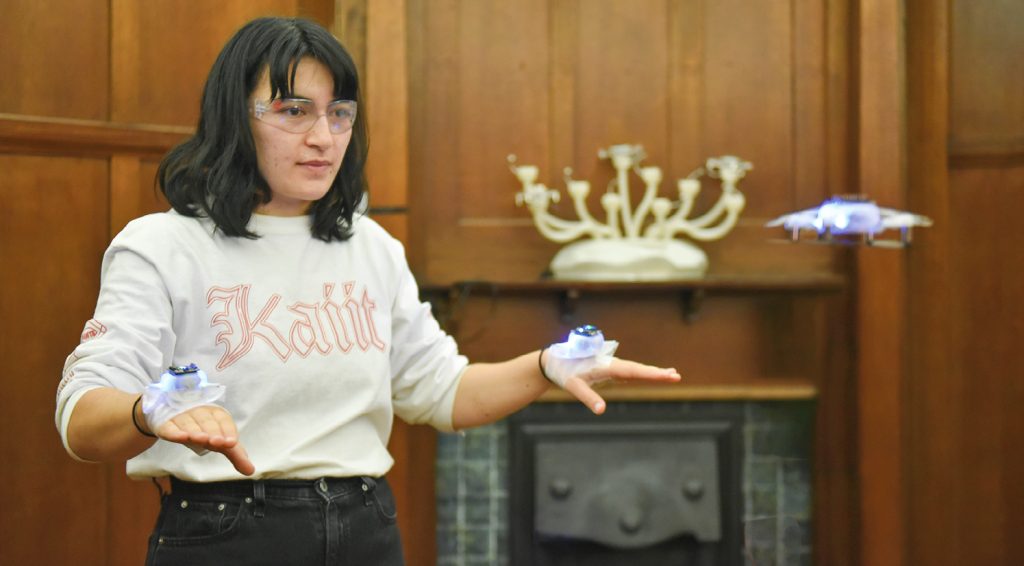

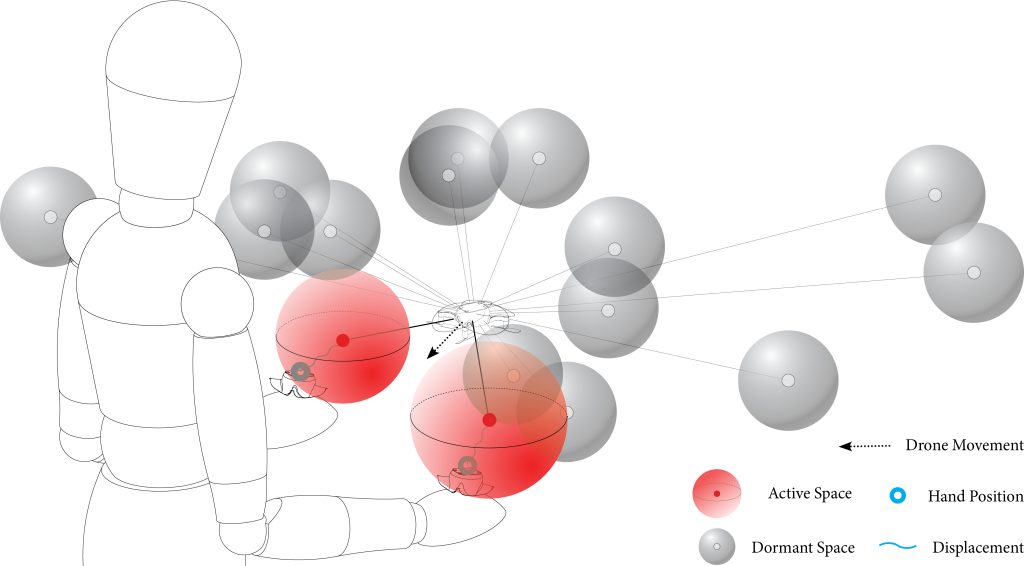

Wiring Harness for Drone Chi

This charger is essentially a pair of JST 2-pin extensions for a 1S battery charger that I soldered together and then wove through some fake hanging plants. With the drones already looking like flowers for the Drone Chi project, they blended well into the fake plants and all the wires were well hidden. When you wanted to fly, you would disconnect the battery from the wiring harness. Plus, it brings the nice experience of picking a flower from a bush before you start flying!

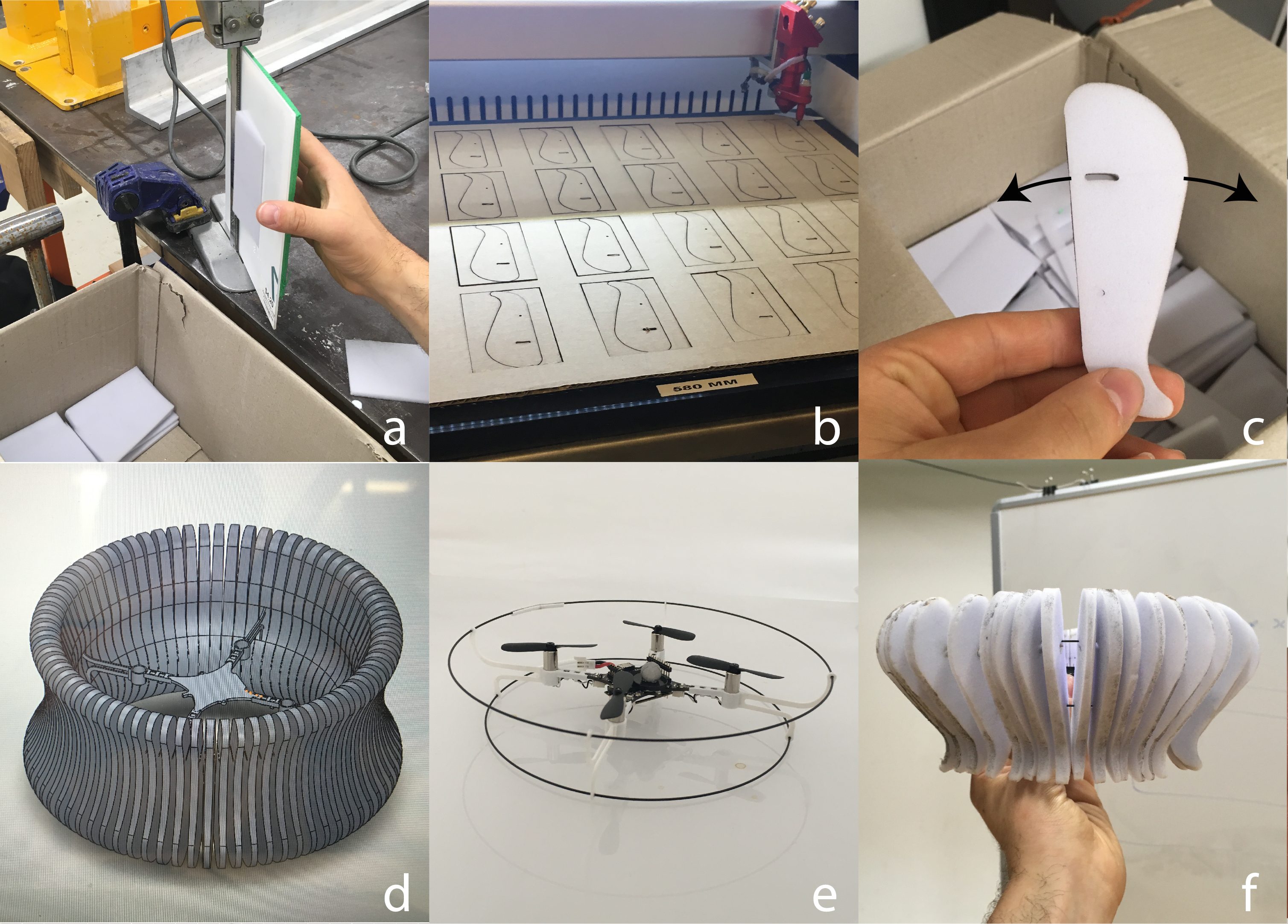

Magnetic Mantelpiece Charger for How To Train Your Drone

This charger allows 10 Crazyflies to charge from their USB ports. It is also a bit of a statement piece: it lived in the lounge room of a group of friends who were participating in a month-long user study during the How To Train Your Drone project. The charger contained a powered USB hub with cables running through each of the Medusa-like tubes rising from the base. What made this charger special was the magnetic USB charging adapters (widely available on eBay, Amazon, Temu, etc.) that were plugged into the USB ports on the drones. These allowed you to securely place the drone on the charger in one action, as the magnetic cables integrated into the charger were always close enough to the drone when you set it down, giving you a satisfying * click * every time! They also gave off an eerie blue glow, which I think matched the Crazyflie well.