Since the end of 2024, we’ve been putting effort into spreading out our manufacturing. With international trade rules changing rapidly, it felt like the right moment to expand our production footprint. Doing this helps us keep stock more stable, react faster to demand, and make things easier for you when it comes to potential import-related costs.

To make it happen, we’ve been working closely with our long-time partner in China, Seeed Studio. They’ve been helping us move the production of some of our items to Vietnam, where exciting new opportunities have opened up. This way, we can keep the same quality and reliability you’re used to while spreading out production across more locations, which makes our supply chain stronger.

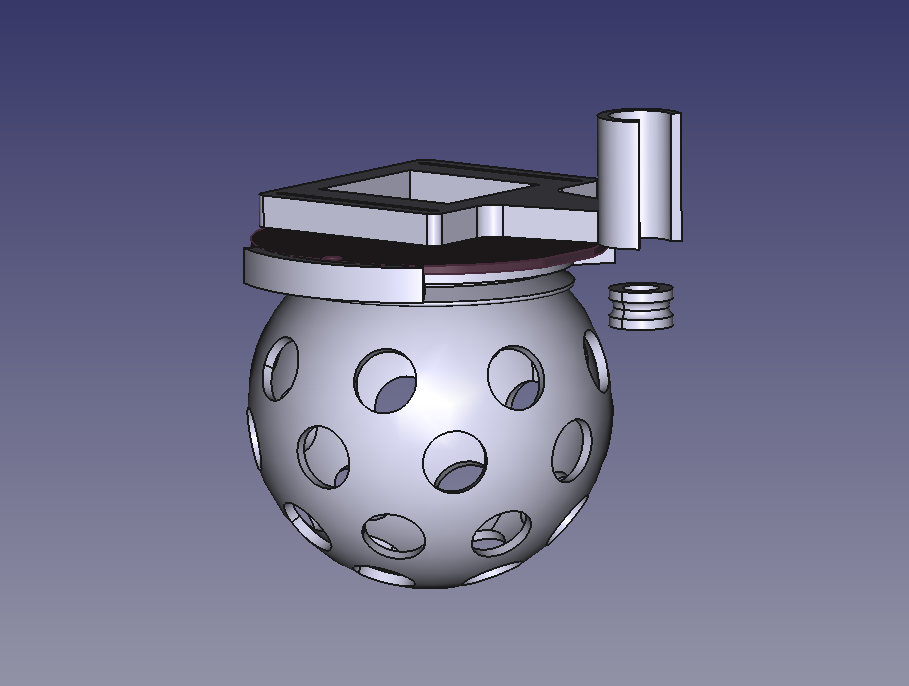

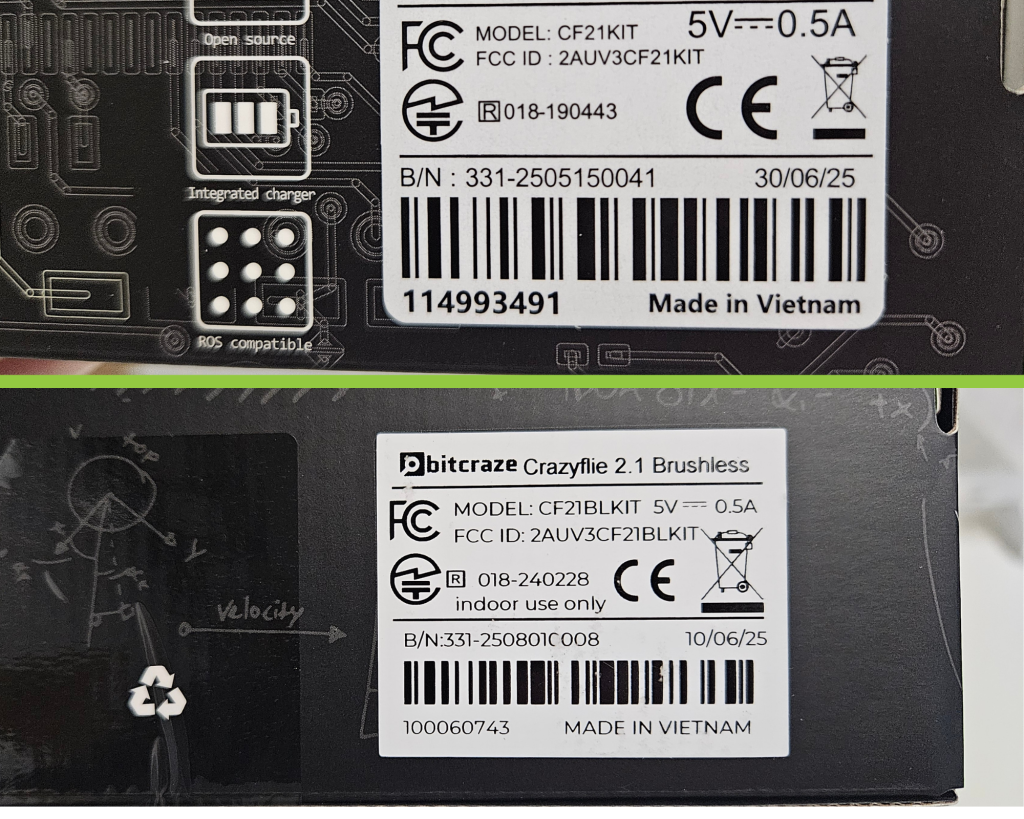

Right now, four of our products are being made in Vietnam: the Crazyflie 2.1+, the Crazyflie 2.1 Brushless, the Flow Deck, and the Crazyradio 2.0. Meanwhile, the Charging Dock is made here in Sweden, and the Lighthouse Base Station comes from Taiwan. Together with our ongoing production in China, that means our manufacturing is now spread over four different countries!

We still produce in China as well; that’s where our newest deck will come from, for example. The plan is to gradually add more products made in Vietnam, spreading production across locations to reduce risk and keep things running smoothly for both us and you. Over time, this will make it easier to maintain stock, respond quickly to demand, and give you a smoother experience no matter where you are in the world.

We also want to make it clear where your products come from when you shop with us. In May, we updated the store to display the country of origin for each item. You can now find this information at the bottom of every product page, so you always know where the items in your cart are made. For many of you, this small detail helps you plan ahead and makes it easier to estimate any extra costs from international shipping.