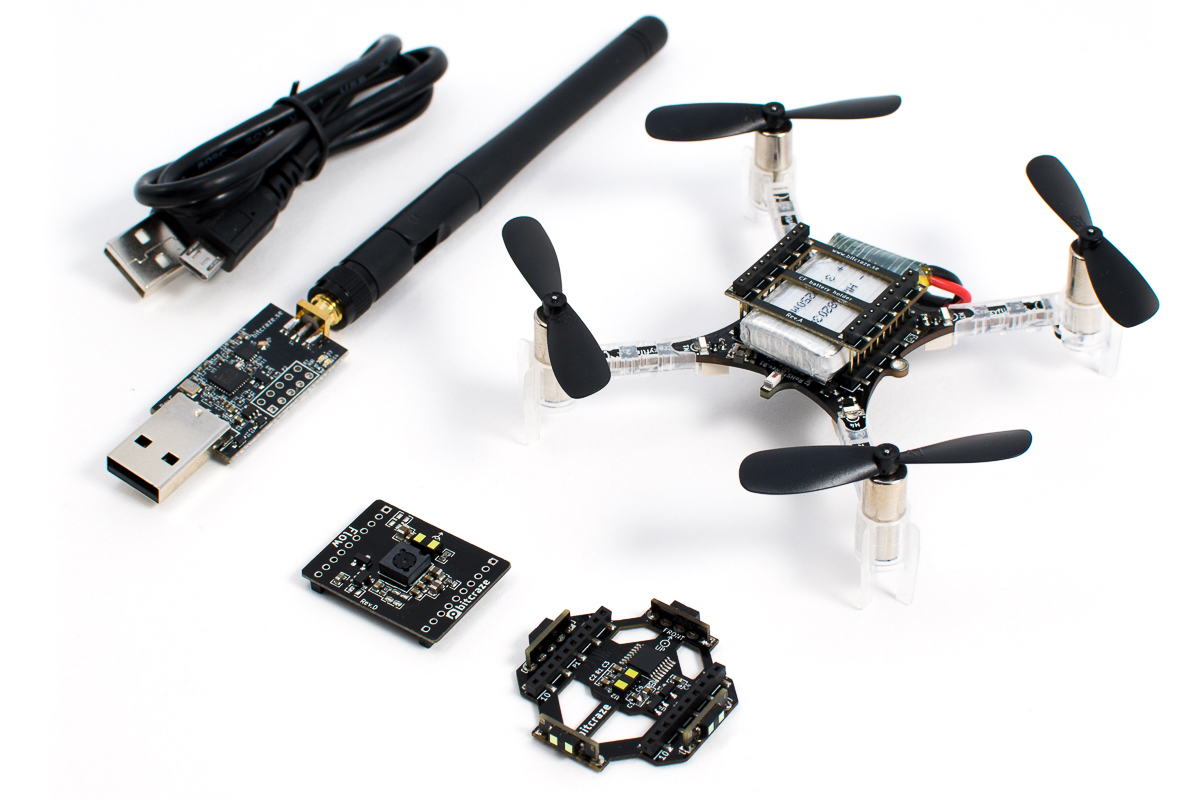

While Crazyflie is nowaday mostly used connected to a computer, we have mobile clients that can be used to fly a Crazyflie using either Bluetooth Low Energy or a Crazyradio with an Android device or an iphone.

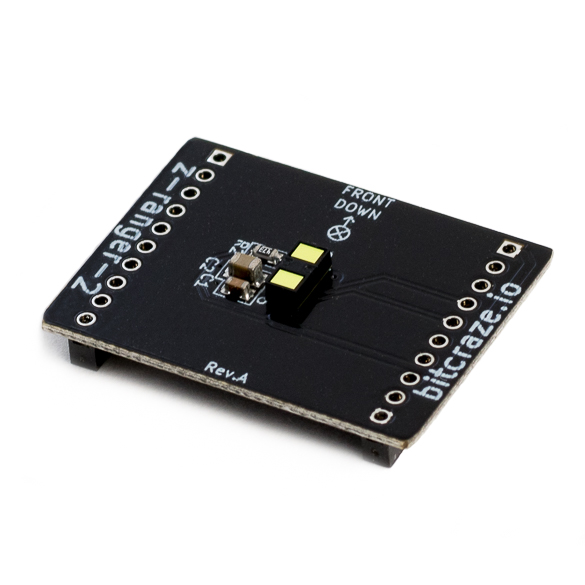

The Android client is currently the most advanced one with support for some decks. The goal of these mobile clients is to at least allow to fly a Crazyflie manually, though a lot more could be done by supporting the various decks of the Crazyflie (for example using the flow deck, one might imagine drawing a trajectory on the phone and having the Crazyflie following it :-).

As for development, we have not been very active in the development of the mobile clients and are relying mostly on contributions. So if you are interested into adding functionalities do not hesitate to drop by the Github page of the Android or iOS clients and to propose functionalities and pull requests.

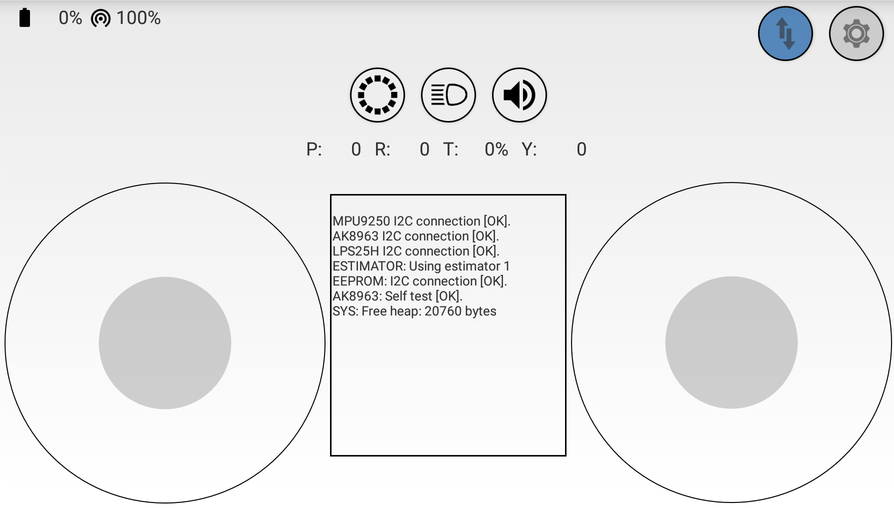

Android client

In 2018, Fred the maintainer of the Android client, has worked hard to stabilize the current app and solve the last few bugs and problem in the current app. A new version was released last week that incorporate all the fixes.

Last years the Android client has seen big internal changes including separating all Crazyflie protocol handling in a separate java library. All these changes will make it easier to implement new functionality in the future and to make the functionality available to android as well as, to any Java program using the Crazyflie java lib.

Iphone client

The iPhone client has seen much less activity in 2018. It has been kept updated with the new versionsd of the Swift language and have seen some bugfixes, all thanks to Github contributors.

There have been reports of a couple of pretty bad bugs that have appeared in the latest release, as soon as these bugs are fixed we plan to release a new version of the iPhone client. The new version will also include the possibility to control the Crazyflie by tilting the phone, and with the bug fixes in place we should be off for a good start of 2019.

Windows client

The Windows UAP Crazyflie client is the least advance of all the mobile Clients. It has the particularity to work on Windows 10 for computers as well as for Phones. This makes it the only implementation of a Bluetooth low energy Crazyflie client for computers. However, Windows 10 for phones being pretty much dead now, the future of this client might be more on the Computer side if any.

Anyway, if anyone is interested in improving the Windows client, we will gladly test and merge pull requests when they come.