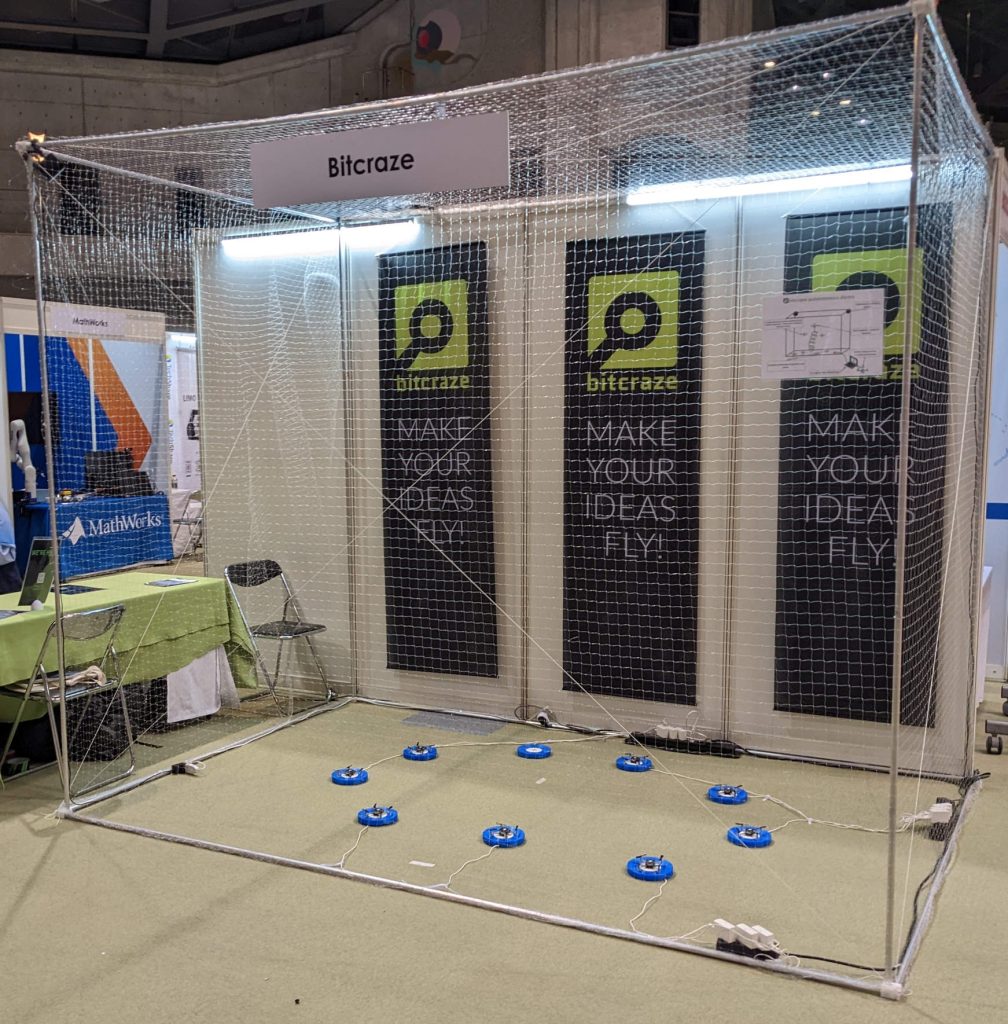

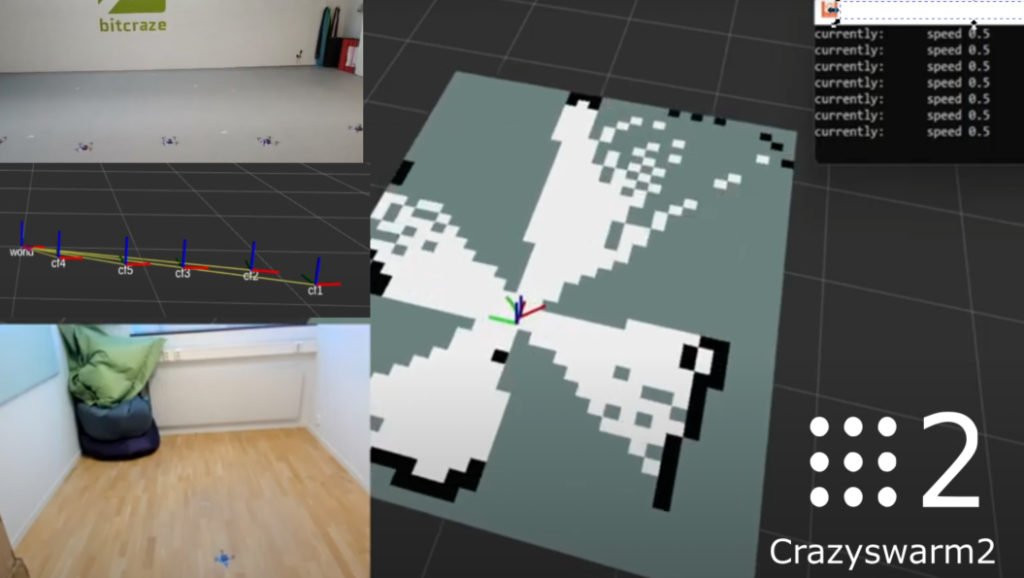

This year, the traditional Christmas video was overtaken by a big project that we had at the end of November: creating a test show with the help of CollMot.

First, a little context: CollMot is a show company based in Hungary that we’ve partnered with on a regular basis, having brainstorms about show drones and discussing possibilities for indoor drones shows in general. They developed Skybrush, an open- source software for controlling swarms. We have wanted to work with them for a long time.

So, when the opportunity came to rent an old train hall that we visit often (because it’s right next to our office and hosts good street food), we jumped on it. The place itself is huge, with massive pillars, pits for train maintenance, high ceiling with metal beams and a really funky industrial look. The idea was to do a technology test and try out if we could scale up the Loco positioning system to a larger space. This was also the perfect time to invite the guys at CollMot for some exploring and hacking.

The Loco system

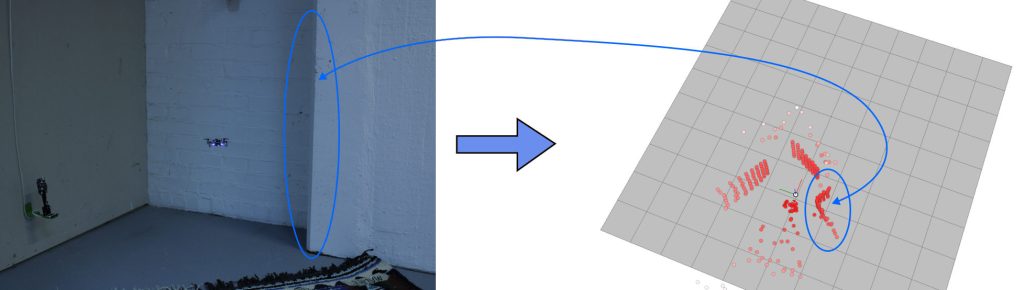

We added the TDoA3 Long Range mode recently and we had done experiments in our test-lab that indicate that the Loco Positioning systems should work in a bigger space with up to 20 anchors, but we had not actually tested it in a larger space.

The maximum radio range between anchors is probably up to around 40 meters in the Long Range mode, but we decided to set up a system that was only around 25×25 meters, with 9 anchors in the ceiling and 9 anchors on the floor placed in 3 by 3 matrices. The reason we did not go bigger is that the height of the space is around 7-8 meters and we did not want to end up with a system that is too wide in relation to the height, this would reduce Z accuracy. This setup gave us 4 cells of 12x12x7 meters which should be OK.

Finding a solution to get the anchors up to the 8 meters ceiling – and getting them down easily was also a headscratcher, but with some ingenuity (and meat hooks!) we managed to create a system. We only had the hall for 2 days before filming at night, and setting up the anchors on the ceiling took a big chunk out of the first day.

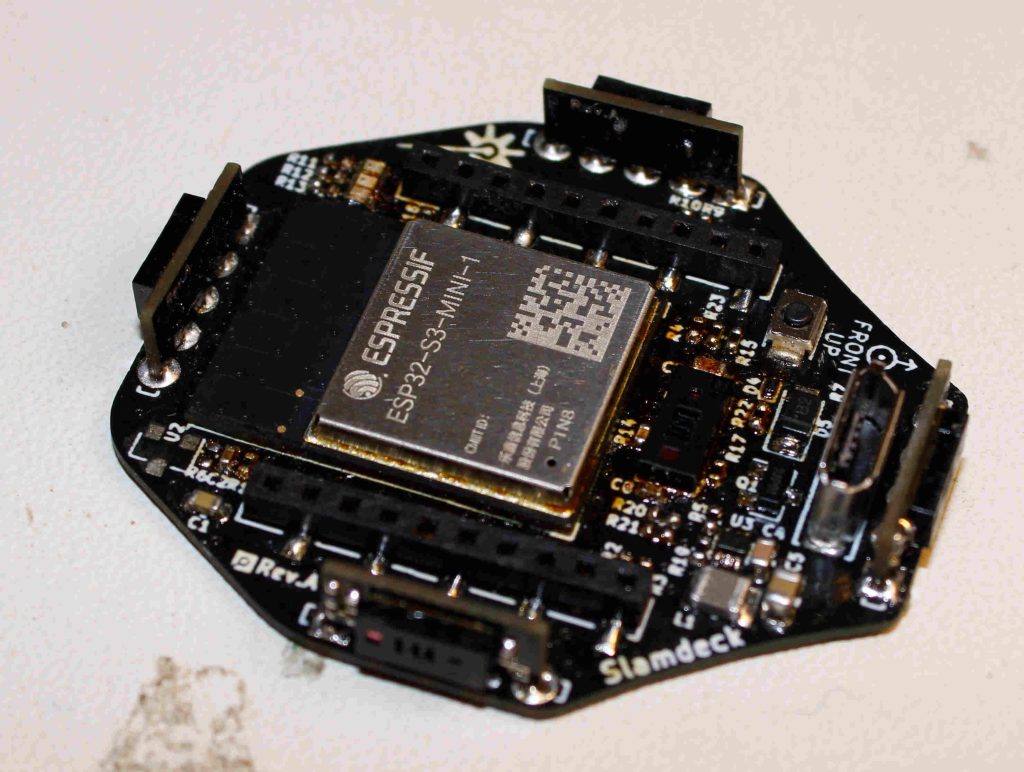

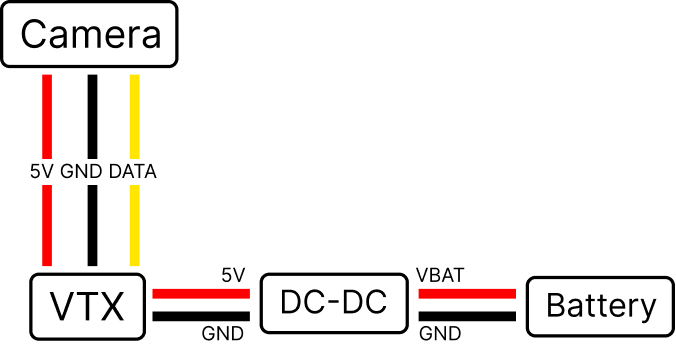

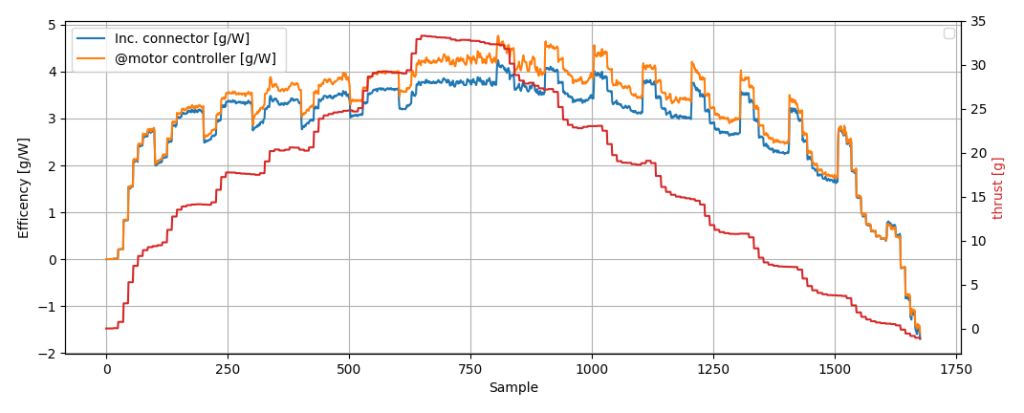

Drone hardware

We used 20 Crazyflie 2.1 equipped with the Loco deck, LED-rings, thrust upgrade kit and tattu 350 mAh batteries. We soldered the pin-headers to the Loco decks for better rigidity but also because it adds a bit more “height-adjust-ability” for the 350 mAh battery which is a bit thicker then the stock battery. To make the LED-ring more visible from the sides we created a diffuser that we 3D-printed in white PLA. The full assembly weighed in at 41 grams. With the LED-ring lit up almost all of the time we concluded that the show-flight should not be longer than 3-4 minutes (with some flight time margin).

The show

CollMot, on their end, designed the whole show using Skyscript and Skybrush Studio. The aim was to have relatively simple and easily changeable formations to be able to test a lot of different things, like the large area, speed, or synchronicity. They joined us on the second day to implement the choreography, and share their knowledge about drone shows.

We got some time afterwards to discuss a lot of things, and enjoy some nice beers and dinner after a job well done. We even had time on the third day, before dismantling everything, to experiment a lot more in this huge space and got some interesting data.

What did we learn?

Initially we had problems with positioning, we got outliers and lost tracking sometimes. Finally we managed to trace the problems to the outlier filter. The filter was written a long time ago and the current implementation was optimized for 8 anchors in a smaller space, which did not really work in this setup. After some tweaking the problem was solved, but we need to improve the filter for generic support of different system setups in the future.

Another problem that was observed is that the Z-estimate tends to get an offset that “sticks” and it is not corrected over time. We do not really understand this and will require more investigations.

The outlier filer was the only major problem that we had to solve, otherwise the Loco system mainly performed as expected and we are very happy with the result! The changes in the firmware is available in this, slightly hackish branch.

We also spent some time testing maximum velocities. For the horizontal velocities the Crazyflies started loosing positioning over 3 m/s. They could probably go much faster but the outlier filter started having problems at higher speeds. Also the overshoot became larger the faster we flew which most likely could be solved with better controller tuning. For the vertical velocity 3 m/s was also the maximum, limited by the deceleration when coming downwards. Some improvements can be made here.

Conclusion is that many things works really well but there are still some optimizations and improvements that could be made to make it even more robust and accurate.

The video

But, enough talking, here is the never-seen-before New Year’s Eve video

And if you’re curious to see behind the scenes

Thanks to CollMot for their presence and valuable expertise, and InDiscourse for arranging the video!

And with the final blogpost of 2022 and this amazing video, it’s time to wish you a nice New Year’s Eve and a happy beginning of 2023!