Over time, the scope of the Crazyflie has changed a lot. At first, the Crazyflie was “just flying”, and the only possible control was an attitude (roll, pitch, yaw) and thrust setpoint sent over the radio. Soon after, autonomous flight was investigated, first by implementing a position controller outside the Crazyflie and then, over time, by moving position control on-board and sending position or trajectory setpoints to the Crazyflie. Now that the Crazyflie has good position control, the next step is to implement autonomous behavior, and until now the most practical way to do this has been from code running on an external computer. Just as position control moved from off-board to on-board, it needs to become possible to implement autonomous behavior in the Crazyflie itself. This blog post is about two newly implemented capabilities that will make it possible to implement automation in the Crazyflie firmware in an easy and maintainable way: the “App layer” and P2P communication.

App layer

The “App layer” is a term we have been using internally at Bitcraze to describe a set of functionalities that allow custom code to run in the Crazyflie. This includes the infrastructure to compile and maintain external code running in the Crazyflie, as well as a set of APIs to control flight and behavior from C code rather than over radio communication.

Last week we implemented the first step of the App layer: the infrastructure part. It is now possible to build the Crazyflie firmware out-of-tree. This means that a project can point to the Crazyflie firmware folder and compile a firmware from the project folder without touching the Crazyflie firmware folder. In practice, this allows you to create a git repository that implements custom firmware code and has the Crazyflie firmware as a sub-repository. This makes maintaining custom firmware code much easier than maintaining a branch of the Crazyflie firmware, as was previously required.

A second piece that has been implemented is the app entry point. It allows you to start running code by just creating an “appMain()” function. The function is called from a dedicated FreeRTOS task after the Crazyflie has initialized and started. This should make it much easier to get started.
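To give an idea of the shape of such an app, here is a minimal sketch that builds on a regular computer rather than on the Crazyflie. Only the name “appMain()” comes from the firmware; `delay_ms()`, `app_step()` and the counters are hypothetical stand-ins we made up for illustration. In a real app the loop would typically never return and would wait with a FreeRTOS delay instead of the stub used here.

```c
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical stand-in for a firmware delay call so the sketch builds on a host.
 * It only accumulates the requested time instead of actually sleeping. */
uint32_t elapsed_ms = 0;
void delay_ms(uint32_t ms) { elapsed_ms += ms; }

/* Hypothetical behavior step: one iteration of the autonomous logic.
 * Returns false when the app should stop (only so that this sketch terminates). */
uint32_t step_count = 0;
bool app_step(void)
{
    step_count++;
    return step_count < 5;
}

/* Entry point: in the firmware this function is called from a dedicated task
 * once the Crazyflie has initialized and started. */
void appMain(void)
{
    while (app_step()) {
        delay_ms(100); /* pace the loop at roughly 10 Hz */
    }
}
```

Keeping the behavior in a small step function like this is just one possible design choice; it makes the logic easy to exercise off-board before flashing it.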

As an example, we have extracted the multiranger push demo into a standalone git repository. It demonstrates the implementation of autonomous behavior using this new infrastructure.

Peer to Peer communication

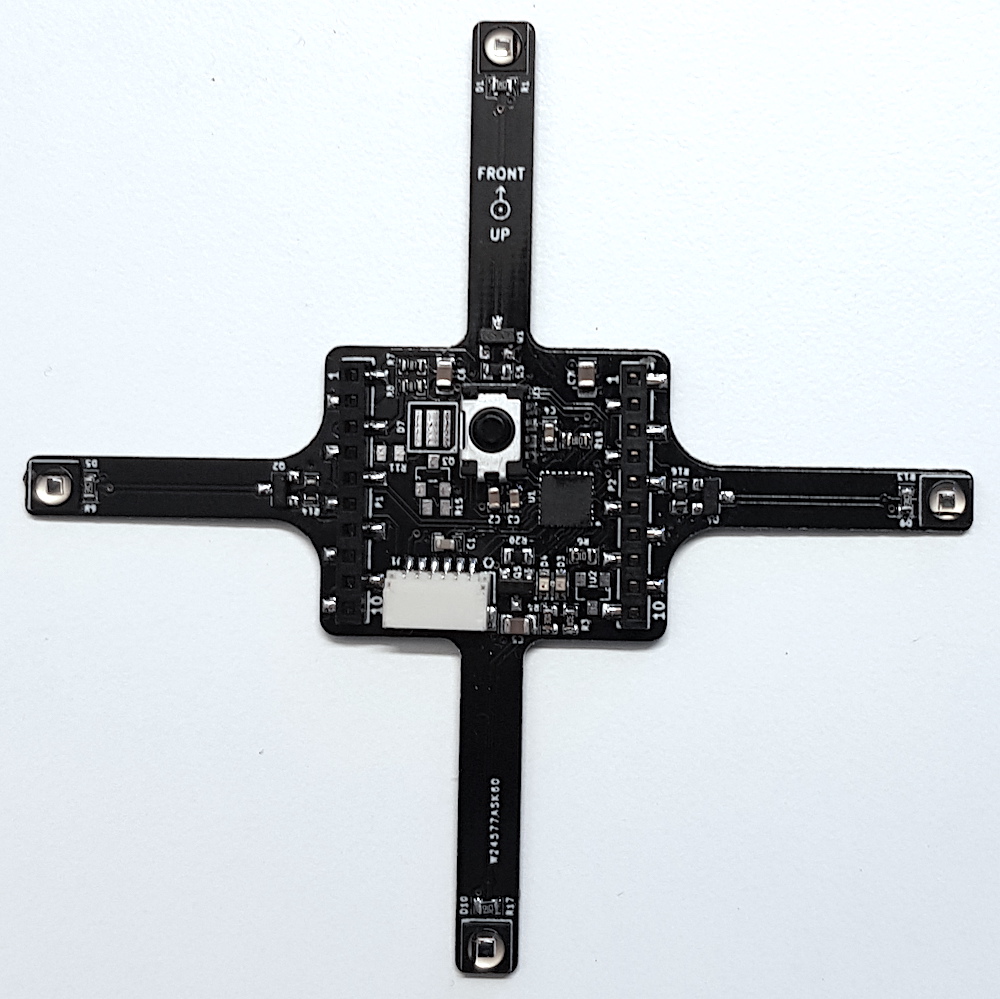

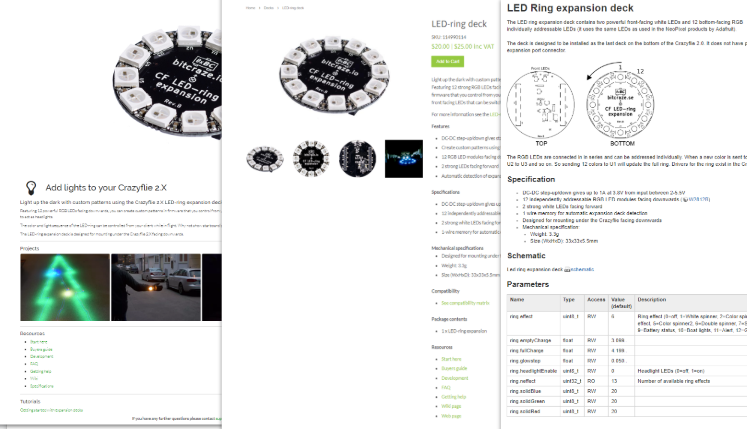

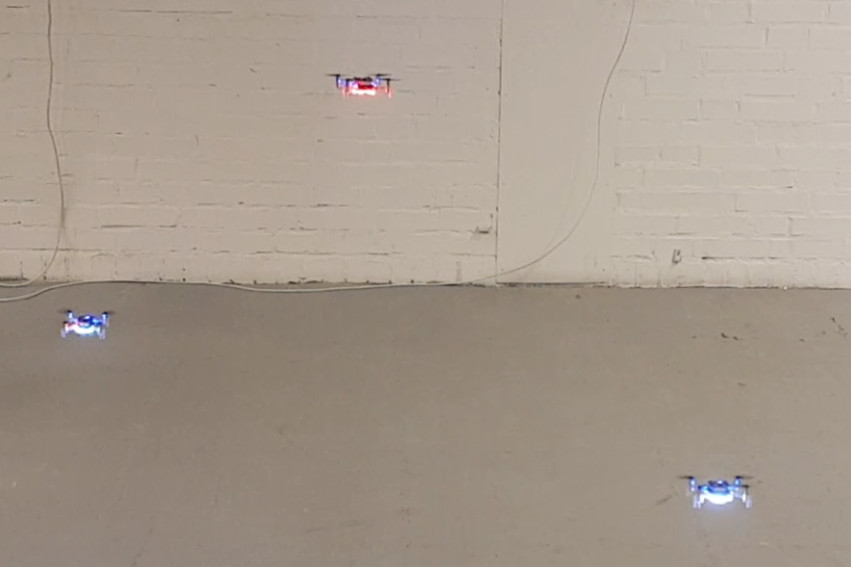

The Crazyflie has been used in a lot of research related to swarming; some examples are the Crazyswarm project and the work done at Carnegie Mellon University. However, it is now time to turn it up a notch. On the forum and in the GitHub repository, there have been several requests to enable direct peer-to-peer communication between Crazyflies. We finally found time to work on it and have implemented some basic functionality on both the NRF and STM sides of the firmware.

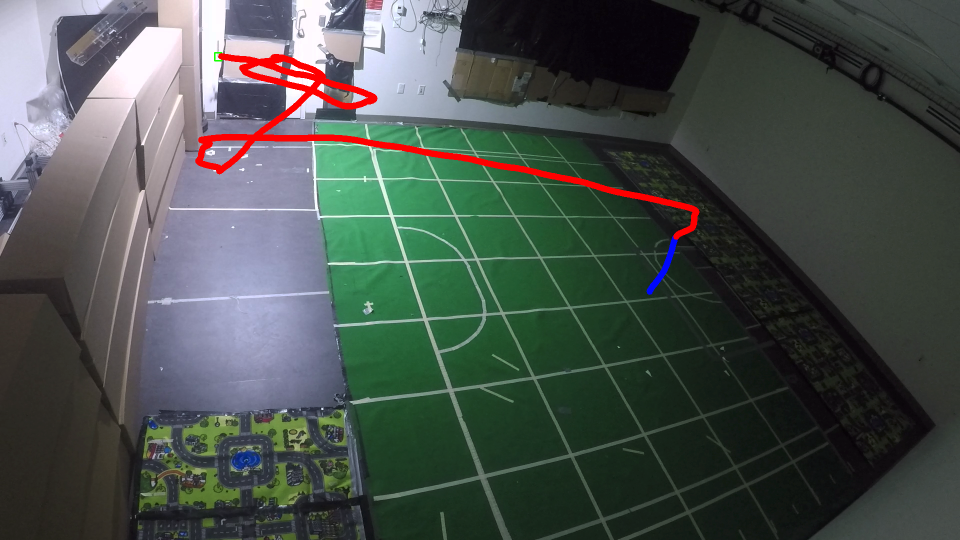

Currently, it is possible to send and receive a P2P packet in broadcast mode directly from the STM (see how to do this in the documentation). This enables data to be sent from one Crazyflie to another with a maximum data size of 60 bytes. We were able to stress-test this on our test rig by sending broadcast messages in a round-robin fashion, where the broadcast message was passed through 10 Crazyflies in 10-20 ms. Even though the current implementation is very minimal for now, we were able to fix some existing issues in the radiolink framework along the way.
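As an illustration of the 60-byte limit, here is a small host-side sketch of how a payload could be checked and copied into a packet buffer before broadcasting. The type `p2p_packet_t` and the function `p2p_fill()` are hypothetical names made up for this example and are not the firmware API; the actual send and receive functions are described in the documentation.

```c
#include <stdbool.h>
#include <stdint.h>
#include <string.h>

#define P2P_MAX_PAYLOAD 60 /* maximum data size mentioned in this post */

/* Hypothetical packet layout for illustration only. */
typedef struct {
    uint8_t size;                  /* number of valid bytes in data */
    uint8_t data[P2P_MAX_PAYLOAD]; /* payload to broadcast */
} p2p_packet_t;

/* Copy a payload into the packet. Returns false, leaving the packet untouched,
 * if an argument is invalid or the payload does not fit in one packet. */
bool p2p_fill(p2p_packet_t *pk, const void *payload, size_t len)
{
    if (pk == NULL || (payload == NULL && len > 0) || len > P2P_MAX_PAYLOAD) {
        return false;
    }
    memset(pk, 0, sizeof *pk);
    if (len > 0) {
        memcpy(pk->data, payload, len);
    }
    pk->size = (uint8_t)len;
    return true;
}
```

Anything larger than one packet would have to be split by the app itself, since the current implementation only provides plain broadcast packets.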

We will not stop there, as we are hoping to implement a communication system similar to how the CRTP protocol has been implemented. We are getting a lot of help from our active community members, so check out this GitHub issue to stay up to date with the current discussion.