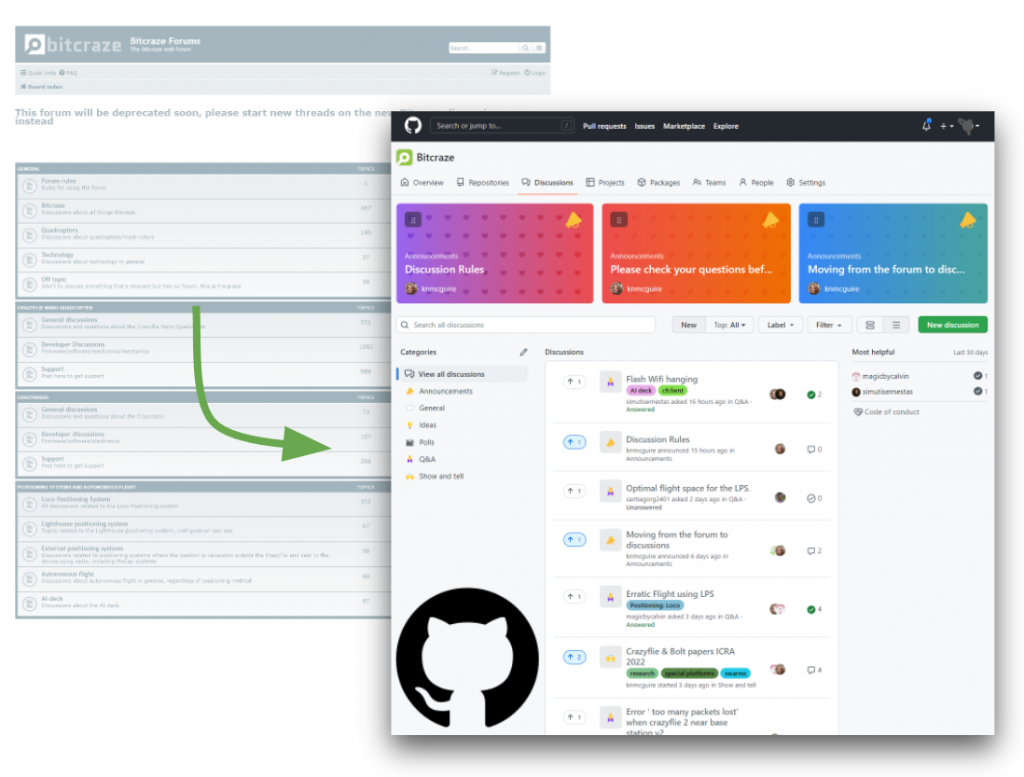

We have already announced it a bit on social media, but in this blogpost we are announcing it officially: we are moving our support to Github Discussions which can be reached by going to discussions.bitcraze.io. We will deprecate our old forum and lock it for new thread creation and user registration starting from week of the 6th of June.

Don’t worry! We don’t have any plans of removing the actual content of the old forum as this is much too valuable for support. But for most communication, support and discussions, we will only be using Github Discussions.

The Reasoning

Technically, there is nothing really wrong with the old forum! Bitcraze has used this phpBB-based platform ever since 2013 and has helped tremendously in support in all those years until now. For a long while, it also has been an active sharing medium next to support, where users could share their cool Crazyflie projects. So it was more than a support channel… it was a community watering-hole! Unfortunately, the backbone of the forum has not progressed in functionality, the categories style defeated it’s purpose and we found we had to constantly switch back and forth between the forum and Github issue list on a daily basis. So we have decided it is time for something else.

Moreover, the last few years we have noticed that the sharing of cool projects became significantly less, no more discussions were initiated and the forum was mostly used for only support and questions. As we missed seeing cool projects and discussing the Crazyflie (also due to Corona), we tried to open up a Bitcraze Discord channel (see this blogpost) to initiate more discussions and sharing as a supplement to the forum. Unfortunately, it did not really take off in terms of activity, and the content is not searchable without a discord account. After a unfortunate hack-attack last year we removed the invite link from our website.

We did get feedback that having 2 platforms separated for support and community was confusing to the users, as they didn’t know where to ask a question anymore. The ideal situation would then to be choose another platform where we can have all on there. Discourse we had our eyes on for a while, which has been used by other open source projects, and has integration support for Github so users didn’t have to make yet another account. Github itself introduced their Discussions feature 1.5 year ago and it was adopted by Crazyswarm (1 and 2). At that time it was only possible to have one discussion tab per repository, and with our 60 (!) repos this didn’t seem to be an option… until recently! Once they released their organization level discussion feature about a 1.5 months ago, we were sold. There is no better Github integration than being on Github itself and with our many repos, this is something we definitely need.

How to use Discussions?

The forum and discussions are quite different in style which takes a little getting used to. The old forum used a classic ‘thread’, which shows exactly the chronological way of the conversation. Discussions uses an approach similar to Stack Overflow, where you can also reply on intermediate answers in that thread.

Where to start a discussion?

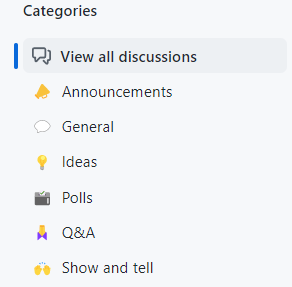

If you see the discussion page, you notice a couple of categories. These are still the standards as given by github, but we think these will work for us as well:

- Announcements: Here is where Bitcraze places announcements in regards of discussions. You can not add announcements yourself but you can reply to them, but do not start a generic support question here.

- General: Just for generic discussions and saying hi!

- Ideas: If you have any ideas for enhancements for the Bitcraze ecosystem, place them here!

- Polls: Same as announcements from Bitcraze, but we will use these to poll a certain opinion.

- Q&A: Here is were all the support questions go! Make sure to read the support rules for this.

- Show & Tell: Show your awesome Crazyflie projects here!

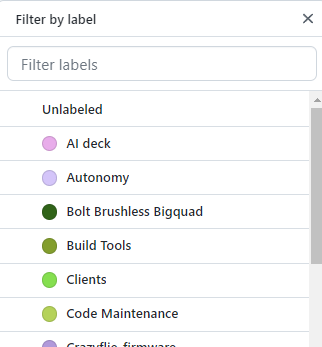

Labels

We first had categories at the forum, which was supposed to separate the discussion in a functional way. In reality, sometimes it was difficult to really separate the issues in those very categories as it overlapped many functionalities. Now in Github discussions, we are able to label these based on the topic, and even add more labels if it indeed overlaps multiple topics. From this, we will also be able to filter out support question about a certain topic which will make it easier for us to have a good look of all support for a certain product or functionality.

So please try to label your support question! But if you haven’t, we will probably do it for you.

Issue or Discussion?

Especially since we are on Github discussions, it might be difficult now to determine when to start an issue at an individual repository and when to start discussion. I really like the explanation that Github itself used to explain the difference:

Discussions are great for questions and ideas that require team communication to make a decision, while Issues are defined pieces of work.

So if you know of a bug/problem, or you want to to propose an enhancement that you know the specific repository of, go ahead and make an issue right in that repo. If you have a problem and you are not sure what is causing it, discussions is the place to go (preferably in Q&A). If there is any ‘pieces of work’ that comes from that discussion, the maintainer can generate an issue in the related repository and link it to the discussion. Other way around, any questions in any issue list that we think is too generic, we will moderate and move it to the organization discussions.

Get involved!

Of course at Bitcraze we will try to help out, but what we really would like is for you to help with answering questions as well! Start up and chat with a topic that you are interested in, or show a small video of your Crazyflie doing something fun. One thing that we would love is the community to be as vibrant as it used to be, were we not only ask support questions, but to discuss and share! It doesn’t have to be big, like you can vote on this Poll to voice your opinion about our move, or vote up / add a smiley to any discussion that you find important.

Also we are planning to have a developer meeting in a few weeks, on the 22nd of June (just before the holidays), so please see the discussion/poll here for more information.