Time flies! 2025 is already drawing to a close, and as has become a Bitcraze tradition, it’s time to pause for a moment and look back at what we’ve been up to during the year. As usual, it’s been a mix of new hardware, major software improvements, community adventures, and some changes within the team itself.

Hardware

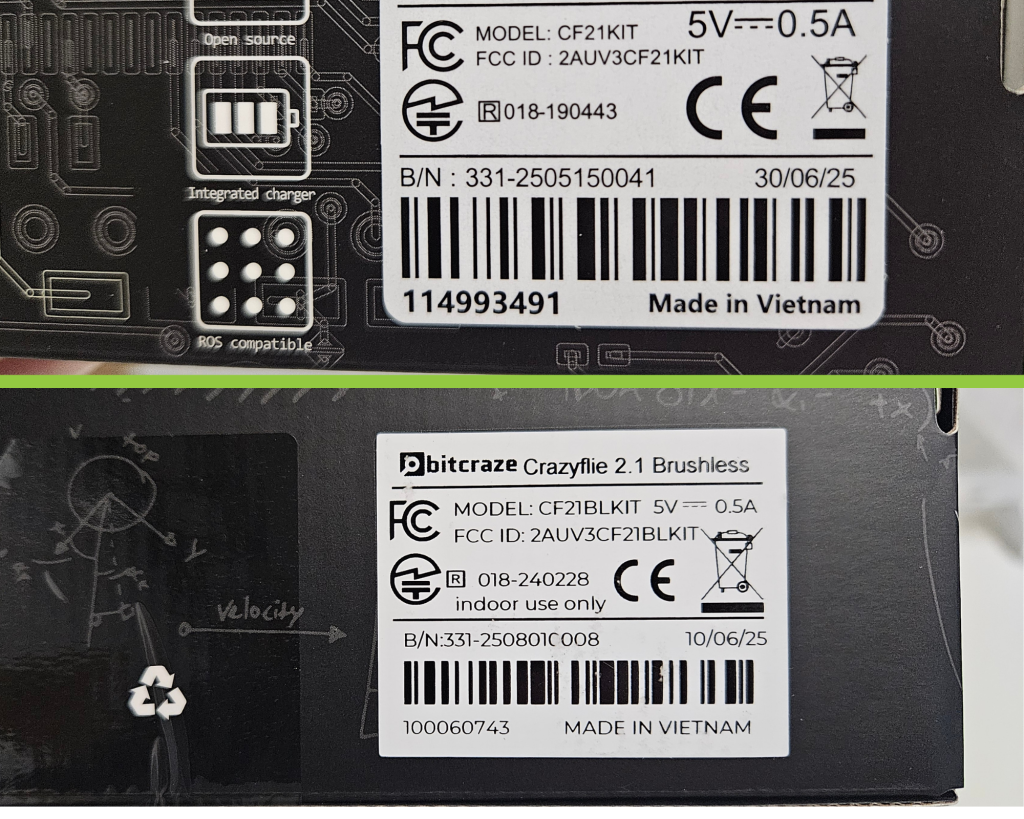

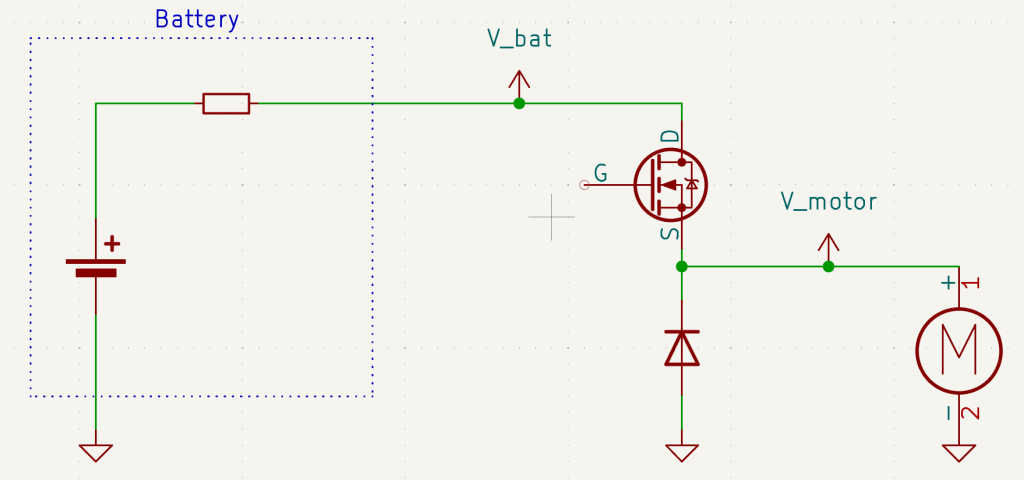

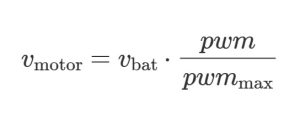

2025 started with a bang, with the release of the Brushless! It was a big milestone for us, and it’s been incredibly exciting to see how quickly the community picked it up and started pushing it in all sorts of creative directions. From more demanding research setups to experimental control approaches, the Brushless has already proven to be a powerful new addition to the ecosystem. Alongside the Brushless, we also released a charging dock enabling the drone to charge autonomously between flights. This opened the door to longer-running experiments and led to the launch of our Infinite Flight bundle, making it easier than ever to explore continuous operation and autonomous behaviors.

Beyond new products, we spent much of the year working on two major hardware-related projects. During the first part of the year, our focus was on expanding the Lighthouse system to support up to 16 basestations. In the second part of the year, much of our effort shifted toward preparing the release of the Color LED, which we’re very much looking forward to seeing in the wild.

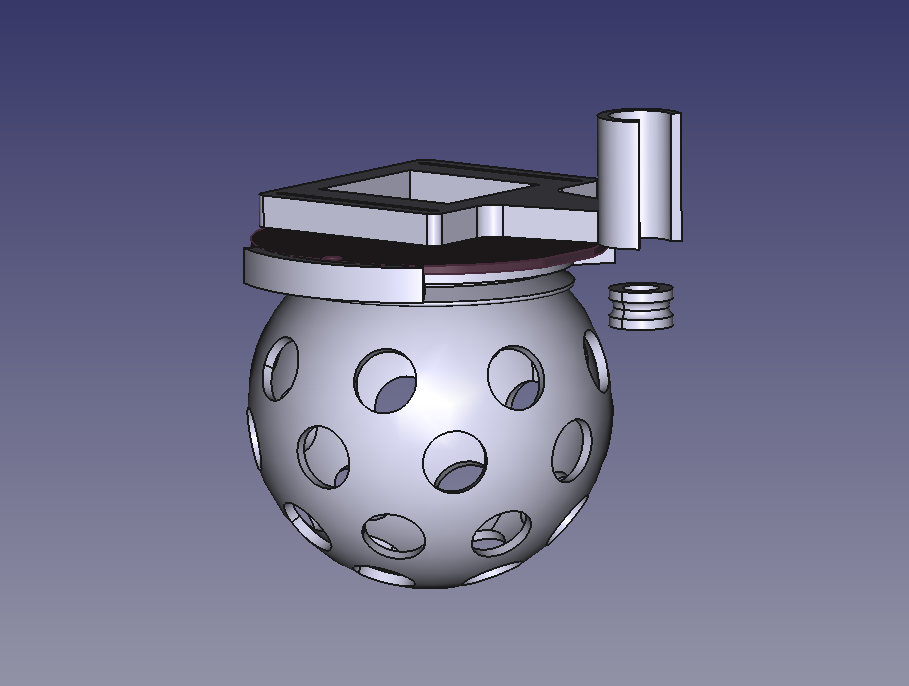

Fun Fridays also got some extra time in the spotlight this year. Aris produced a series of truly delightful projects that reminded us all why we love experimenting with flying robots in the first place. Have you seen the disco drone, or the claw?

Software

On the software side, 2025 brought some important structural improvements. The biggest change was the introduction of a new control deck architecture, which lays the groundwork for better identification and enumeration of decks going forward. This is one of those changes that may not look flashy on the surface, but it will make life easier for both users and developers in the long run.

We also made steady progress with the Rust Lib, which moved from a Fun Friday project to a fully fledged tool. It is now an integrated and supported part of our tooling, opening up new possibilities for users who want strong guarantees around safety and performance.

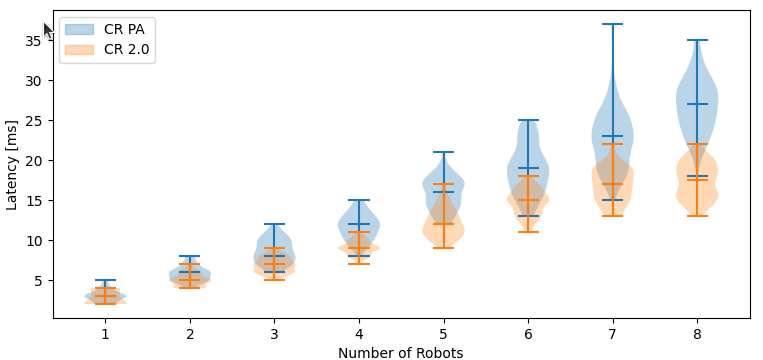

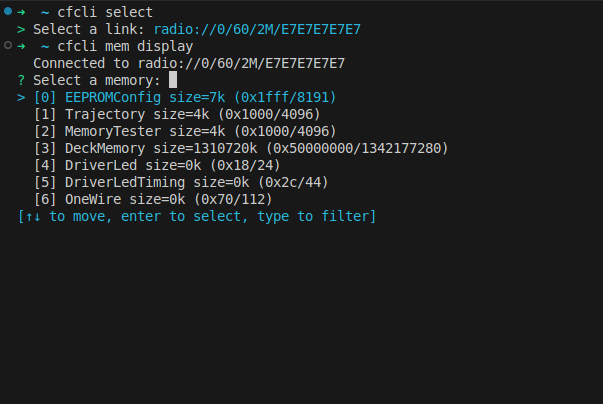

Another long-awaited update this year was an upgrade to the Crazyradio, improving its ability to handle large swarms of Crazyflie™ drones. This directly benefits anyone working with multi-drone setups and helps make swarm experiments more robust and scalable.

Community

It’s fair to say that 2025 was a busy year on the community and events front. We kicked things off, as usual, at FOSDEM, where we hosted a dev room for the first time! A big step for us and a great opportunity to connect with fellow open-source developers.

Later in the year, we made our first trip back to the US since the pandemic. This included sponsoring the ICUAS competition and hosting a booth at ICRA in Atlanta, both of which were fantastic opportunities to meet researchers and practitioners working with aerial robotics. We also presented a brand-new demo at HRI.

In September, KTH hosted a “Drone gymnasium”, giving students hands-on access to Crazyflies and encouraging them to explore new robotic experiences. Seeing what happens when students are given open tools and creative freedom is always inspiring, and this event was no exception.

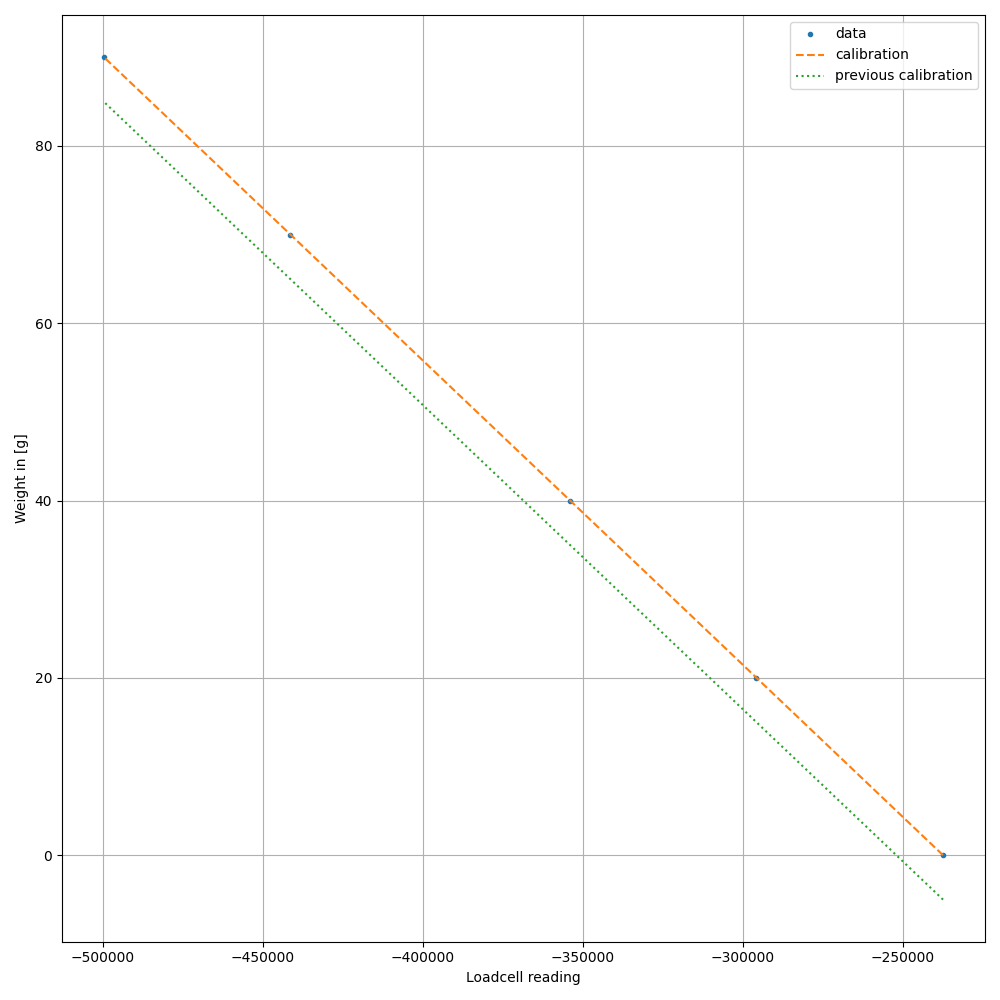

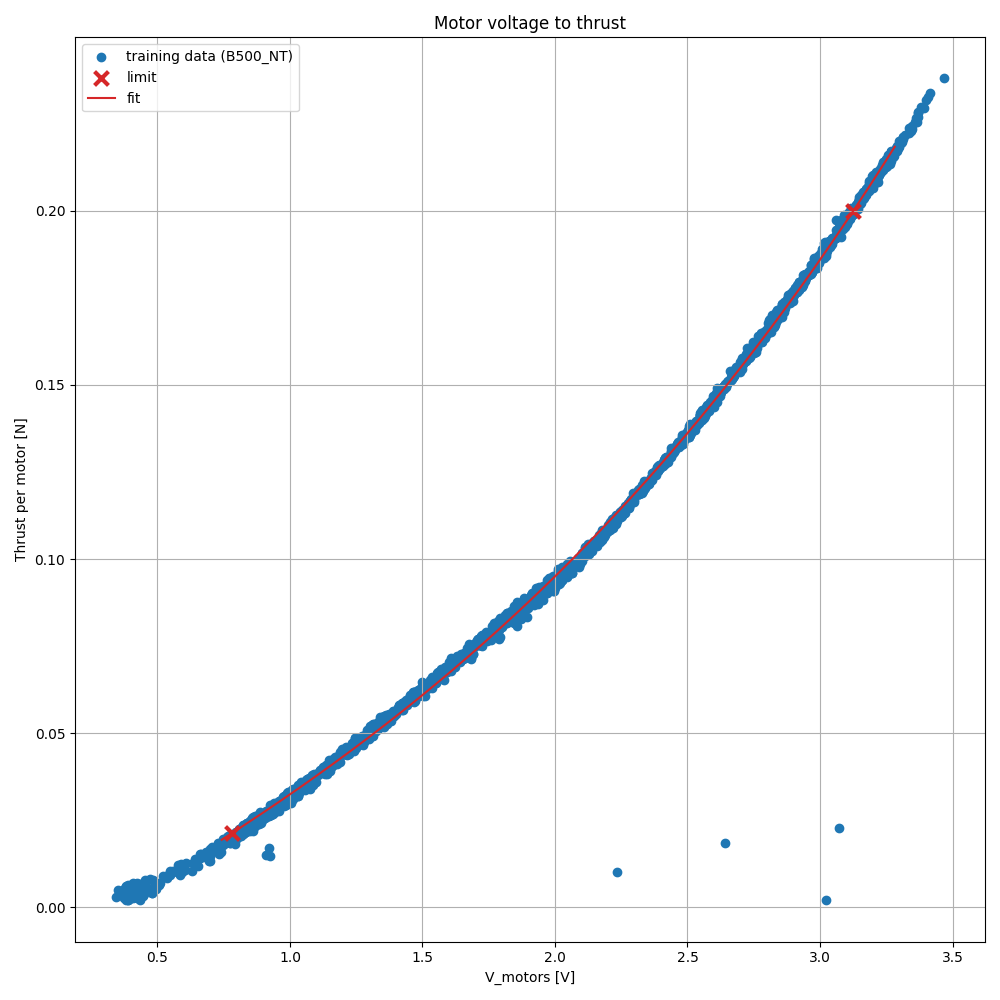

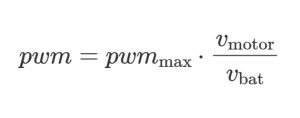

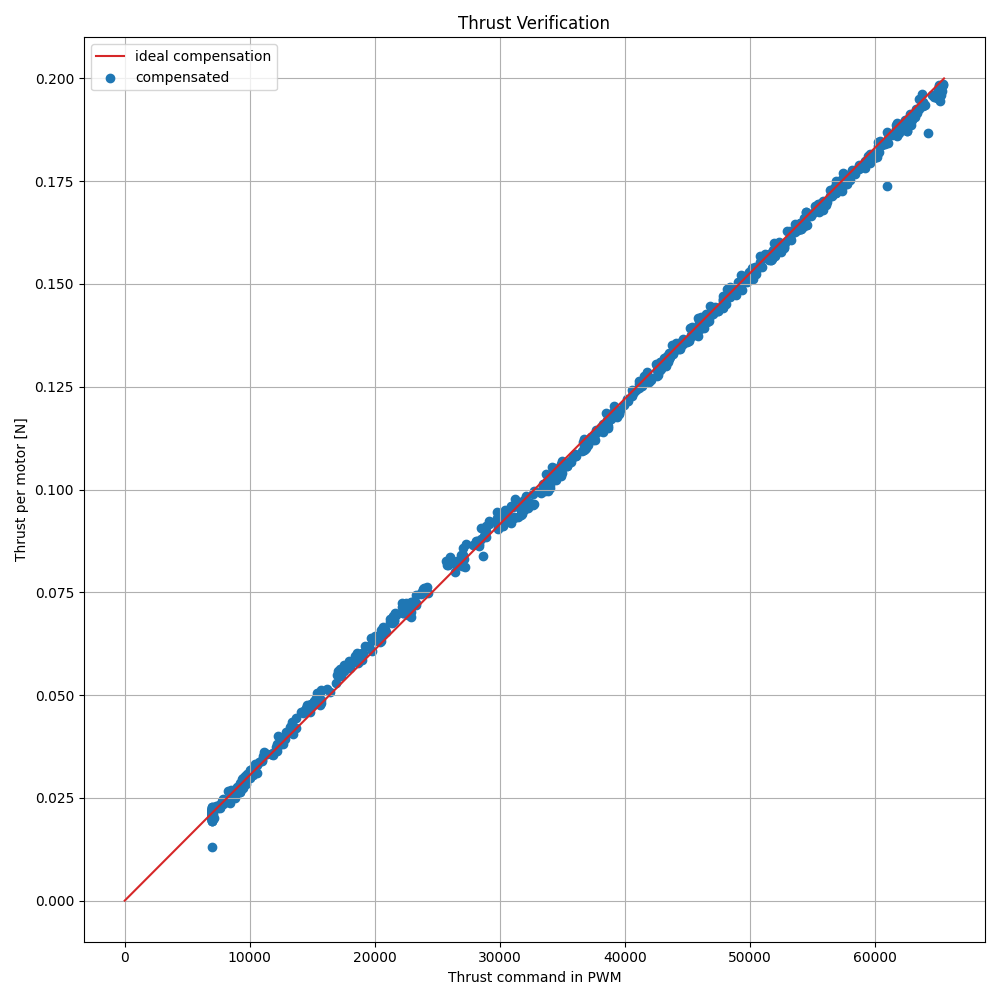

2025 was also marked by continued and valuable community contributions. From improvements and bug fixes to features like battery compensation, these contributions play a huge role in shaping the platform and pushing it forward.

Team

Behind the scenes, Bitcraze itself continued to grow. This year brought both change and expansion within the team. Tove moved on to new adventures in Stockholm, and while we’ll miss her, we were also happy to welcome a record four new team members!

Aris transitioned from intern to full-time developer, Fredrik then joined as a growth and partnership guru, followed by Enya as an application engineer. Hampus was the last to join us as an administrator. With these new faces, the team is larger, and stronger, than ever.

All in all, 2025 has once again been an exciting, intense, and rewarding year for Bitcraze. Thank you to everyone in the community who flew with us, built on our tools, reported issues, shared ideas, and showed us what’s possible with tiny flying robots. We can’t wait to see what 2026 brings.