The High-level Commander has been part of the Crazyflie firmware since the 2018.10 release. In combination with a positioning system, it can fly the Crazyflie along a trajectory that is either defined in the firmware or uploaded through the python lib. It originates from the Crazyswarm project and we have used it in various demos since it is possible to make trajectories that are very fluid and looks really cool. The trajectories are defined as 7th degree polynomials describing segments executed one after each other.

The controller gives full control of position, velocity, acceleration and jerk, the only problem is that it is non-trivial to generate the polynomials. We have wanted to simplify the creation of trajectories for a long time and have finally had some time to play with it. In this blog post we will describe how it can be done with Bezier curves and show some examples.

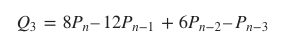

Each segment in a High-level commander trajectory is defined by four 7 degree polynomials, one for x, y, z and yaw. There is also a scaling parameter that tells the controller the time scale to use when executing the segment. Using polynomials of degree 7 makes it possible to design trajectories that are continuous in position, velocity, acceleration and jerk when changing from one segment to the next, which is important to get a smooth and controlled flight.

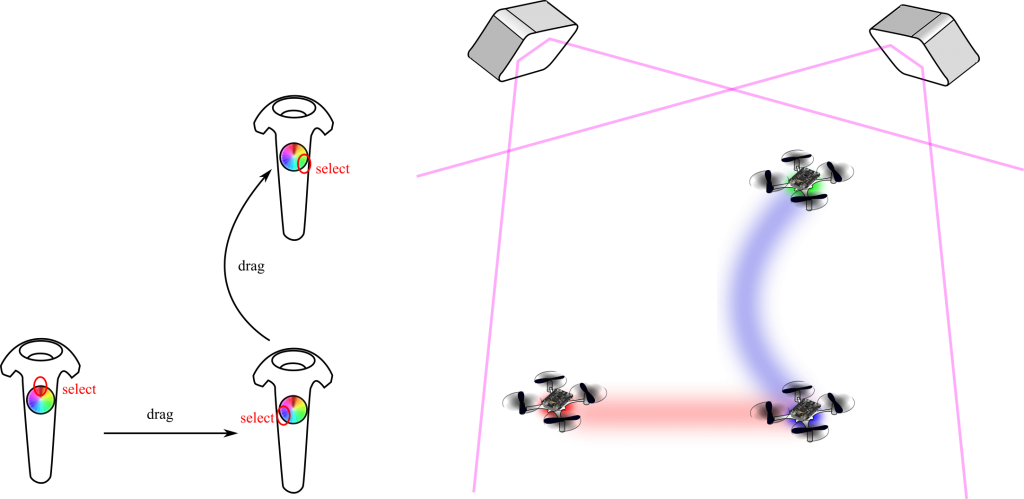

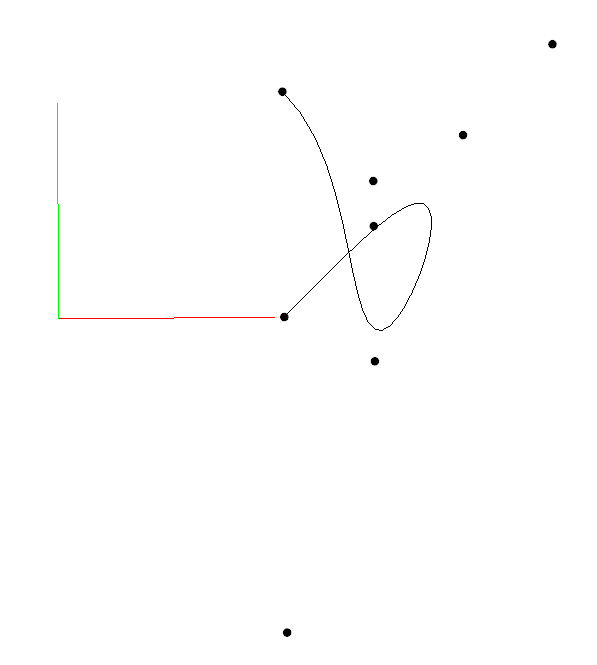

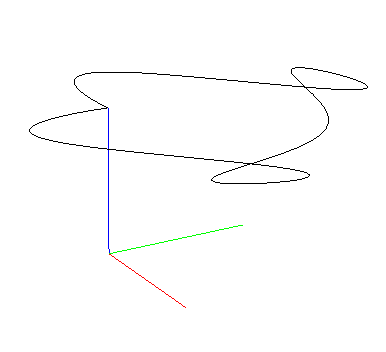

Bezier curves are common in many graphics applications and are probably known to most users. They are parametric curves defined by control points, usually three or four. Bezier curves can also be expressed as a polynomial, and this is what we will use in this case. To get a correct mapping to the desired polynomials we need some more control points and will use 8 per segment. The basic idea is to define the trajectory as Bezier curves and make sure the control points are placed in such a way that the continuity requirements are satisfied.

On this page from University of Cambridge, there is a good explanation on continuity across the joins between curves and formulas for c0, c1 and c2 continuity. We also need c3 continuity which can be calculated in the same manner

With these formulas it is possible to set the handles of the Bezier curves to make sure we get a smooth ride.

We have added a python example that implements the ideas above. You can find it in crazyflie-lib-python/examples/positioning/bezier_trajectory.py. The design is based on Nodes that represents the connection points between bezier curves (called Segments). The Nodes has a set of handles that are shared between the Segments that use the Node. If not all handles are set the implementation will set them to appropriate values, see the comments in the code for more details. The Node API only allows the user to set handles on one of the Segments, the handles for the other segment are automatically set to generate a continuous trajectory.

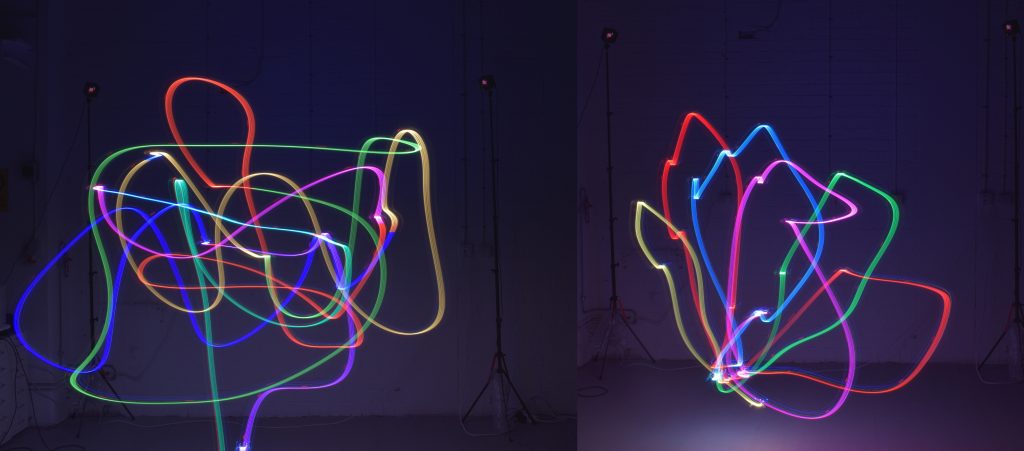

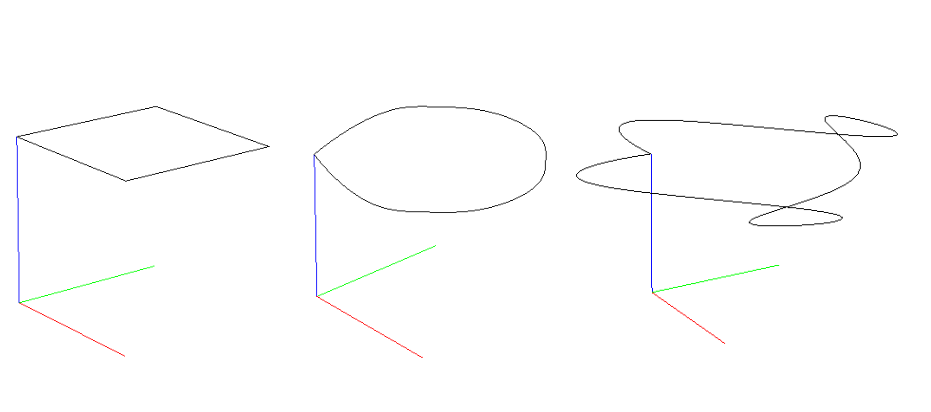

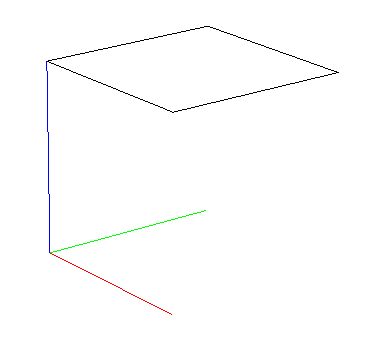

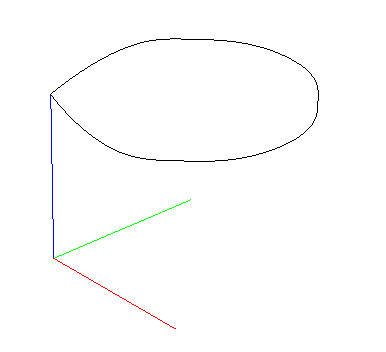

The example uses nodes in the corners of a square and contains three parts:

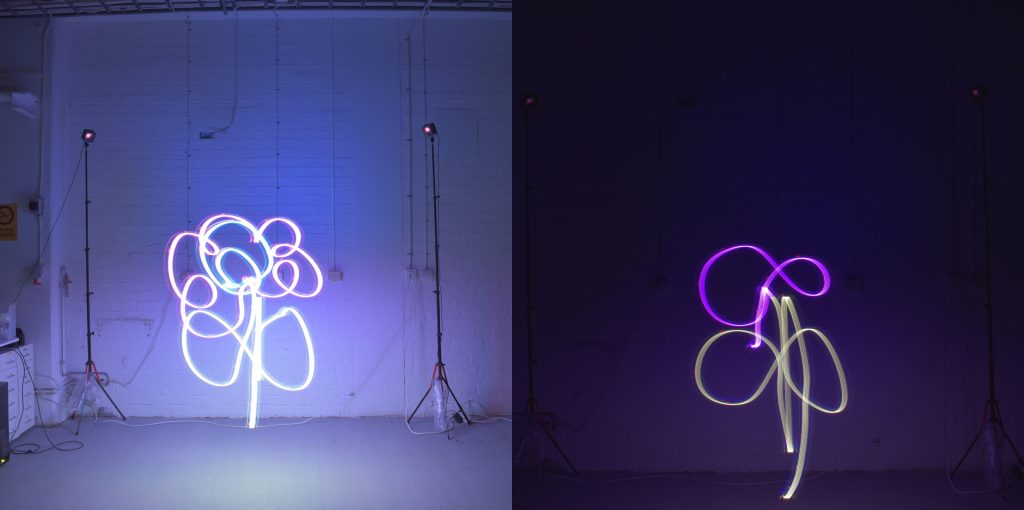

Velocity in the nodes. A fluid motion all the way around.

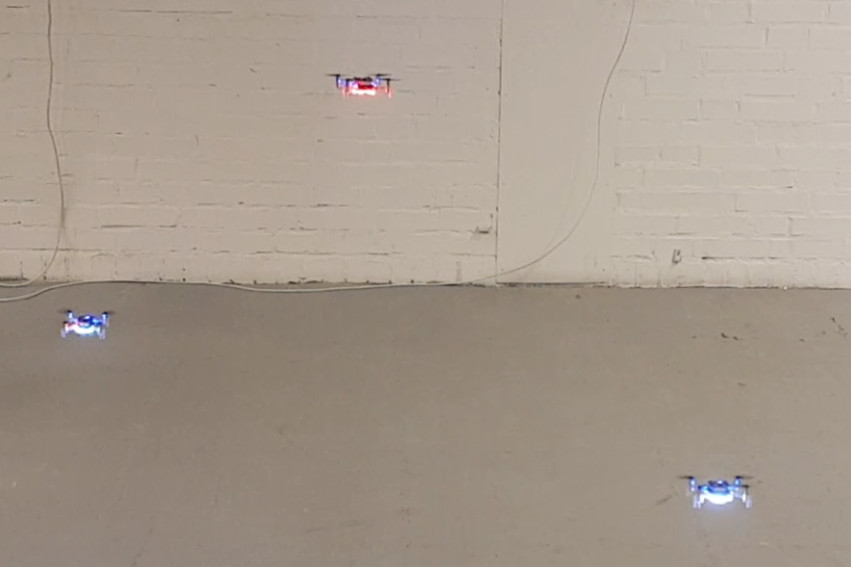

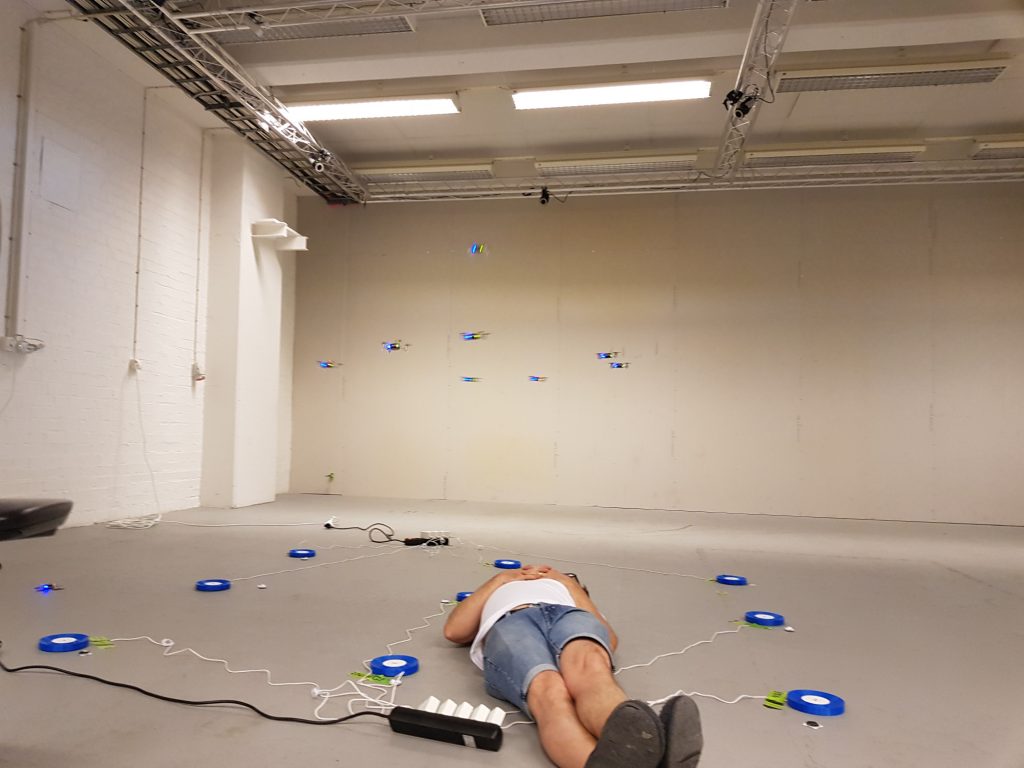

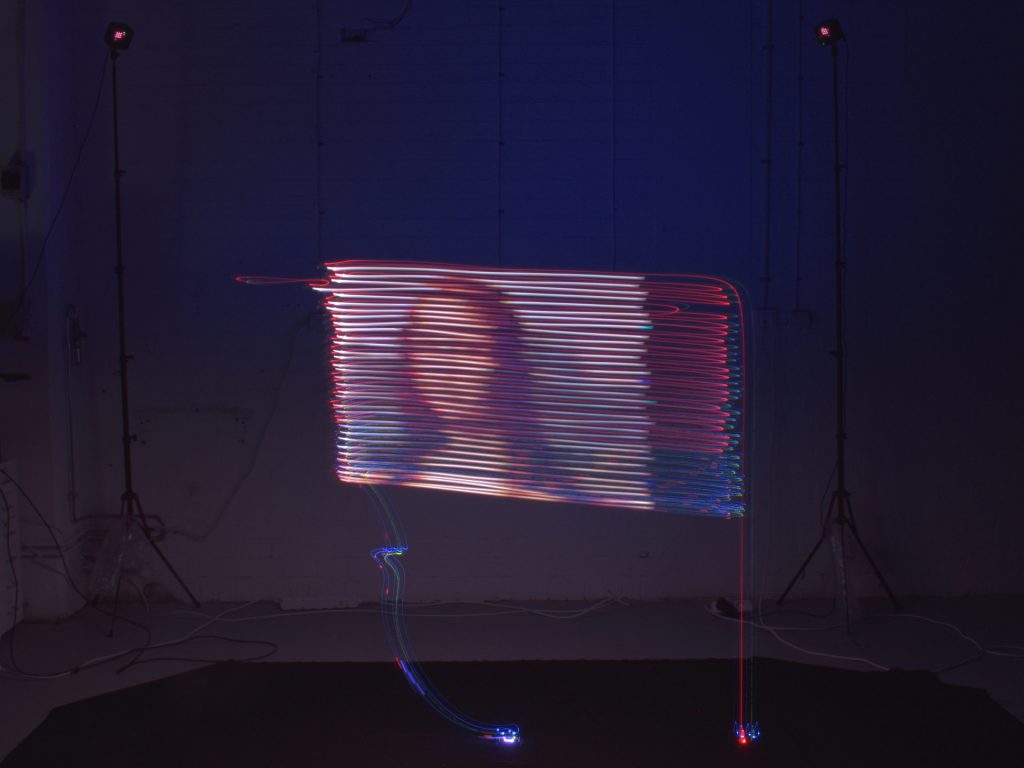

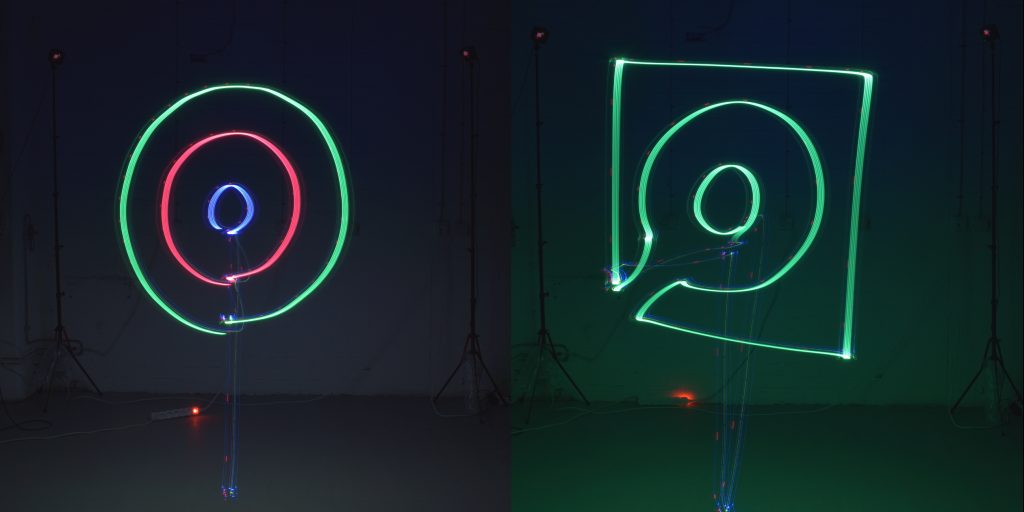

Finally a video showing the full sequence, we use the Lighthouse for positioning.