During the last year we, like many others, have had to deal with new challenges. Some of them have been personal while some have been work related. Although it’s been a challenge for us to distribute the team, we’ve tried to refocus our work in order to keep making progress with our products.

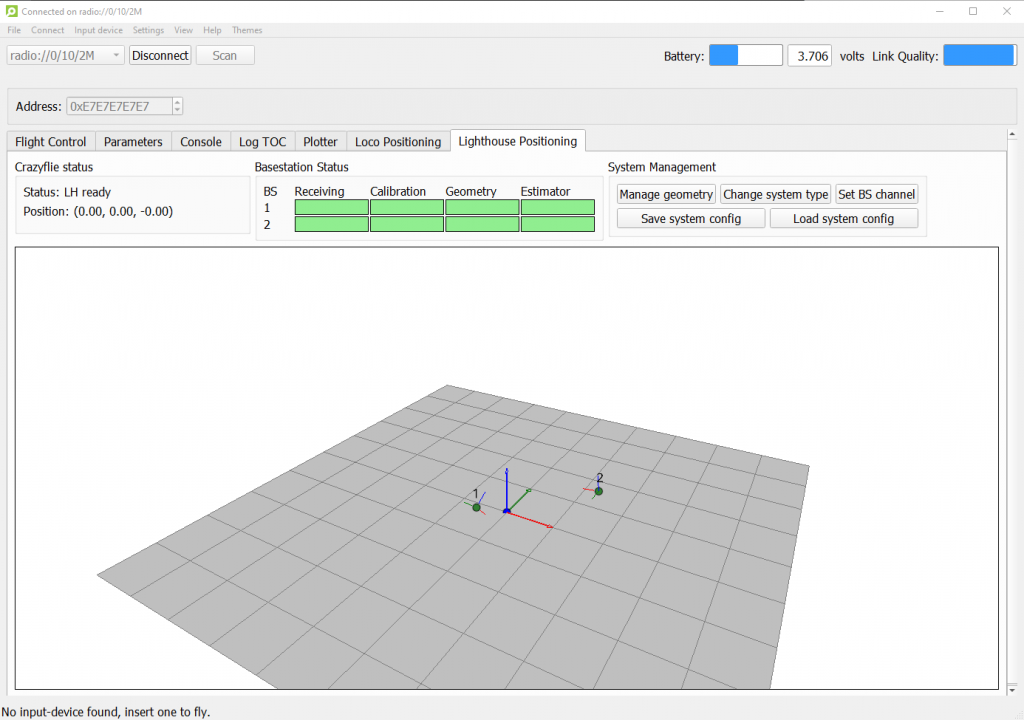

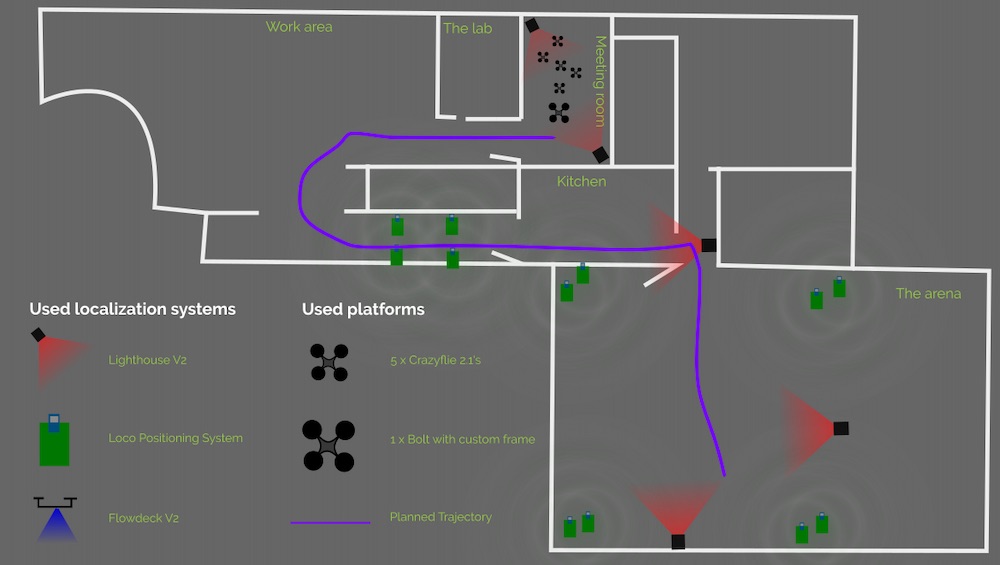

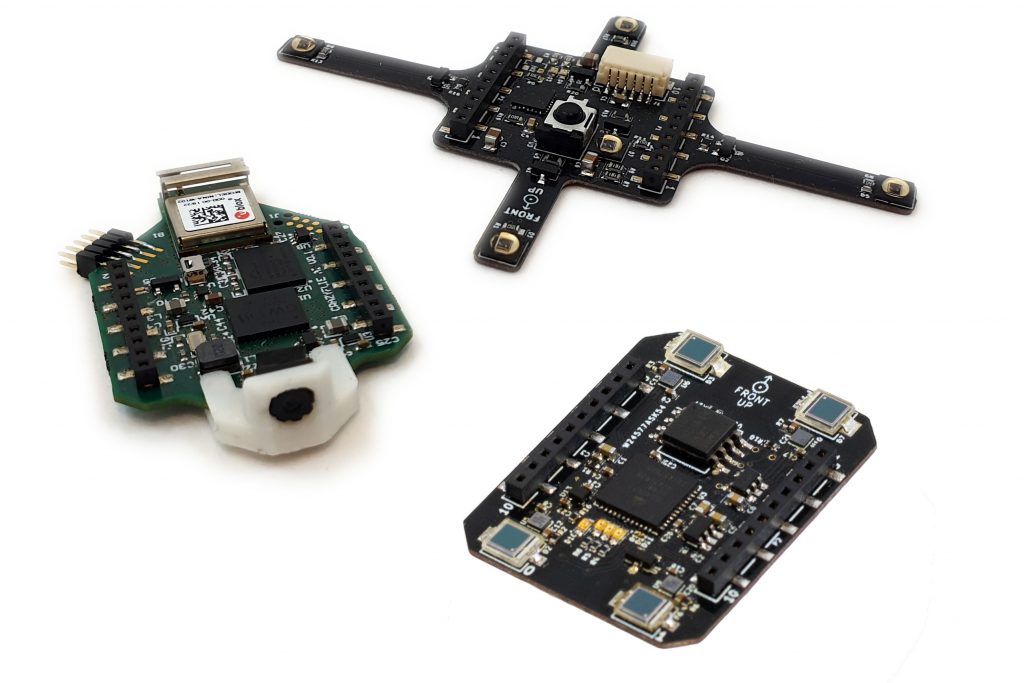

Our users have of course had challenges of their own. Having a large user-base in academia, we’ve seen users having restricted physical access to hardware and purchasing procedures becoming complex. Some users have solved this by moving the lab to their homes using the Lighthouse positioning or the Loco positioning systems. Others have been able to stay in their labs and classrooms although under different circumstances.

At Bitcraze we have been able to overcome the challenges we’ve faced so far, largely thanks to a strong and motivated team, but now it seems as if one of our biggest challenges might be ahead of us.

Semiconductor sourcing issues

Starting early this year lead-times for some components increased and there were indication that this might become a problem. This has been an issue for other parts like GPUs and CPUs for a while, but not for the semiconductors we use. Unfortunately sourcing of components now has become an issue for us as well. As far as we understand the problems have been caused by an unforeseen demand from the automotive industry combined with a few random events where the production capacity of certain parts has decreased. Together it has created a global semiconductor shortage with large effects on the supply chain.

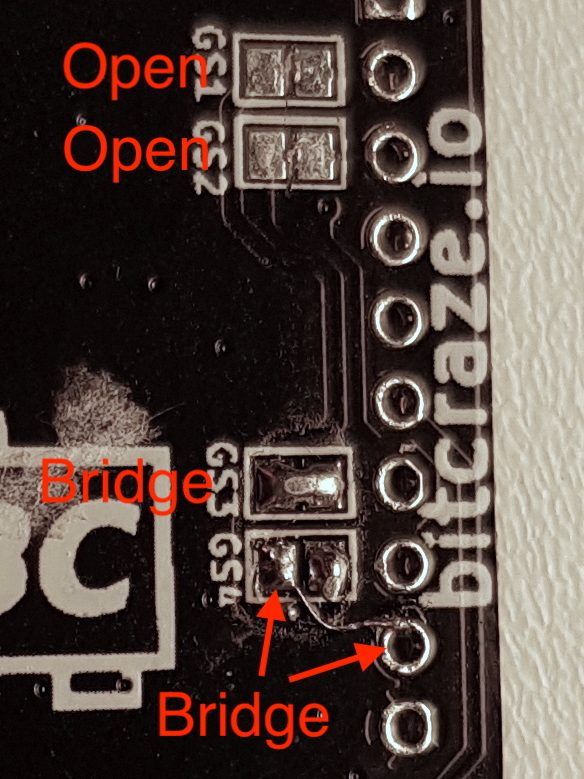

For us it started with the LPS Node where the components suddenly cost twice as much as normally. The MCU and pressure sensor were mainly to blame, incurring huge price increases. Since these parts were out of stock in all the normal distributor channels, the only way to find them was on the open market. Here price is set by supply and demand, where supply is now low and demand very high. Prices fluctuate day by day, sometimes there’s very large swings and it’s very hard to predict what will happen. To mitigate this for the LPS Node we started looking for an alternative MCU, as the STM32F072 was responsible for most of the increase (600% price increase). Since stock of the LPS Node was getting low we needed a quick solution and found the pin-compatible STM32L422 instead, where supply and price was good through normal channels. The work with porting code started, but after a few weeks we got word that importing this part to China was blocked. So after a dead end we’re back to the original MCU, with a few weeks of lead-time lost and a very high production price.

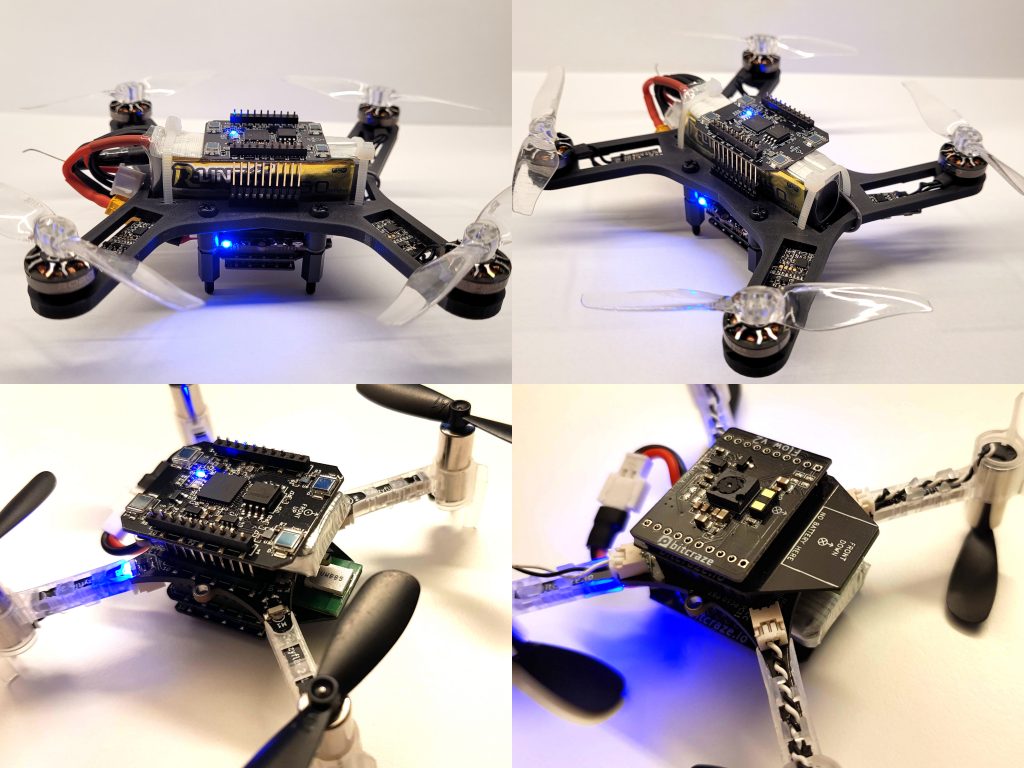

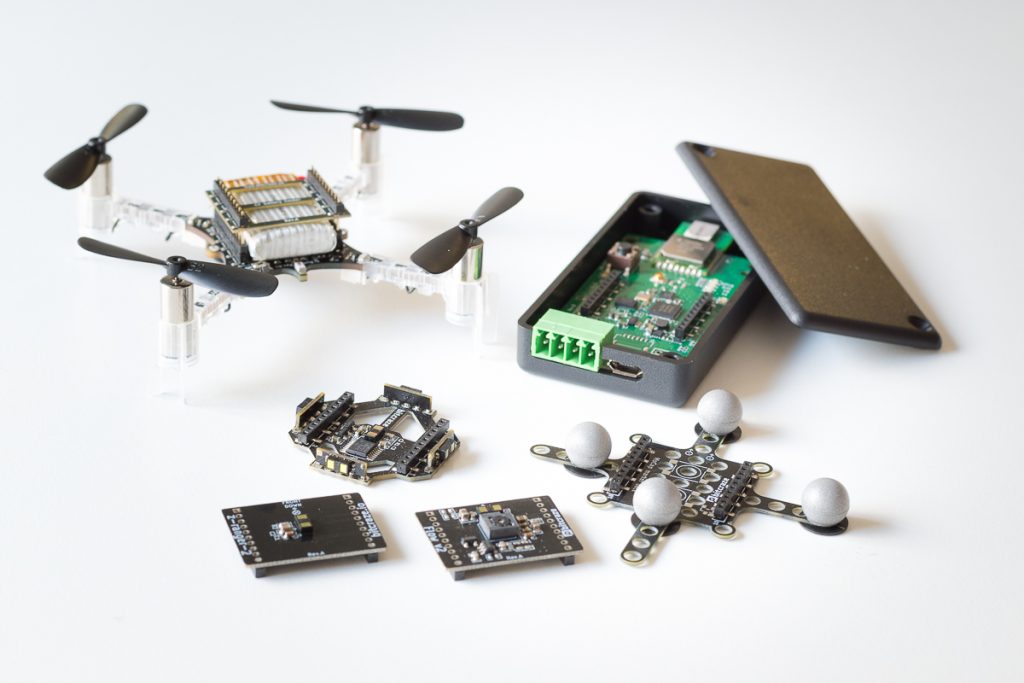

Unfortunately this problem is not isolated to the LPS Node, the next issue we’re facing is the production of the Crazyflie 2.1 where the STM32F405, BMI088, BMP388 and nRF51822 are all affected with increases between 100 and 400 % in price. These components are central parts of the Crazyflie and they can not easily be switch to other alternatives. Even if they could, a re-design takes a long time and it’s not certain that the new parts are still available for a reasonable price at that time.

Aside from the huge price increases in the open market we’re also seeing price increases in official distributor channels. With all of this weighed together, we expecting this will be an issue for most of our products in the near future.

Planning ahead

An even bigger worry than the price increase is the risk of not being able to source these components at all for upcoming batches. Having no stock to sell would be really bad for Bitcraze as a business and of course also really bad for our customers that rely on our products for doing their research and classroom teaching.

To mitigate the risk of increasing price and not being able to source components in the near future, we’re now forced to stock up on parts. Currently we are securing these key components to cover production until early next year, hoping that this situation will have improved until then.

Updated product prices

Normally we keep a stable price for a product once it has been released. For example the LPS Node is the same price now as it was in 2016 when it was released, even though we’ve improved the functionality of the product a lot. We only adjust prices for hardware updates, like when the Crazyflie 2.0 was upgraded to Crazyflie 2.1. But to mitigate the current situation we will have to side-step this approach.

In order for us to be able to continue developing even better products and to support those of you that already use the Crazyflie ecosystem, we need to keep a reasonable margin. From the 1st of May we will be adjusting prices across our catalog, increasing them with 10-15%. Although this doesn’t reflect the changes we are seeing in production prices at the moment, we believe the most drastic increases are temporary while the more moderate ones will probably stick as we move forward.

Even though times are a bit turbulent now, we hope the situation will settle down soon and we think the actions we are taking now will allow us to focus on evolving our platform for the future.