With the raging pandemic in the world, 2021 will most likely not be an ordinary year. Not that any year in the Bitcraze universe has been boring and without excitement so far, but it is unusually hard to make predictions about 2021. Any how, we will try to outline what we see in the crystal ball for the coming year.

Products

What products are cooking in the Bitcraze pot and what tasty new gadgets can we look forward to this year?

Lighthouse

We did hope that we would be able to release the first official version of the Lighthouse system in 2020, but unfortunately we did not make it. It has turned out to be more complex than anticipated but we do think we are fairly close now and that it will be finished soonish, including support for lighthouse V2.

Once the official version has been achieved, we are planning to assemble an full lighthouse bundle, which includes everything you need to start flying in the lighthouse positioning system. This will also include the Basestations V2 as developed by Valve corporation, so stay tuned!

New platforms and improvements

We released the AI-deck last year in early release, but the AIdeck will be soon upgraded with the latest version of the GAP8 chip. For most users this will not change much but for those that really push the deep learning to the edge will be quite happy with this improvement. More over, we are planning to by standard equipped the gray-scale camera instead of the RGB Bayer filter version, due to feedback of the community. We are still planning to offer the color camera on the side as a separate product for those that do value the color information for their application.

Also we noticed the released of several upgraded versions of sensors for the decks that we already are offering today. Pixart and ST have released a new TOF and motion sensor so we will start experimenting with those soon which hopefully lead to a new Multiranger or Flowdeck. Also we are aware of the new DWM3000 chip which would be a nice upgrade to the LPS system, so we will start exploring that as well, however we are not sure if we will be able to release the new version of LPS in 2021 already.

One of the field that we have wanted to improve for a while but have not gotten to so far is the communication with the Crazyflie. The Crazyradio is using a quite old chip and the communication protocol has hardly been touched in years. There now exists a much more powerful nRF52 radio chip with USB port so it can give us the opportunity to make a new Crazyradio and, at the same time, rework the communication protocols to make them more reliable, easier to use and to expand.

People and Collaborations

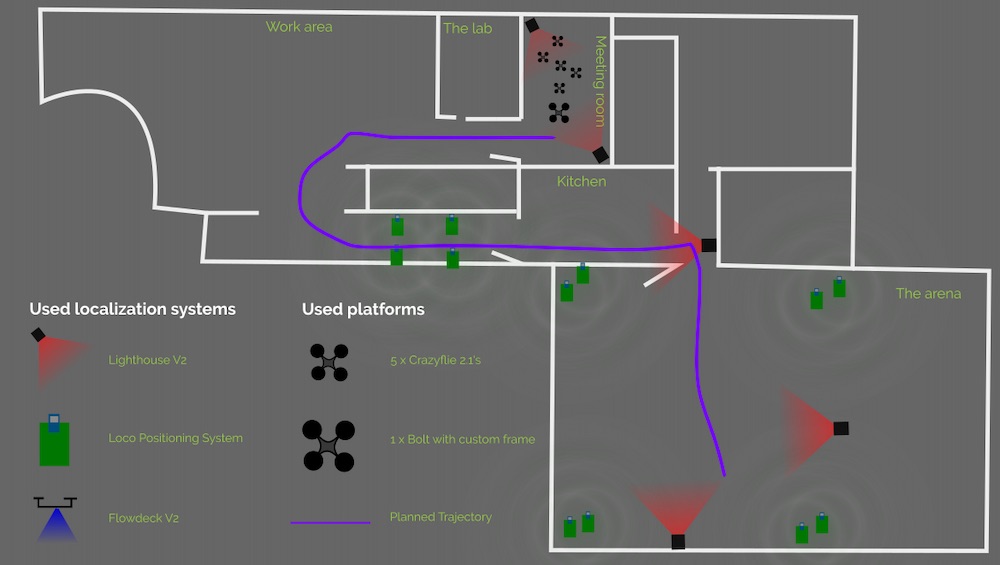

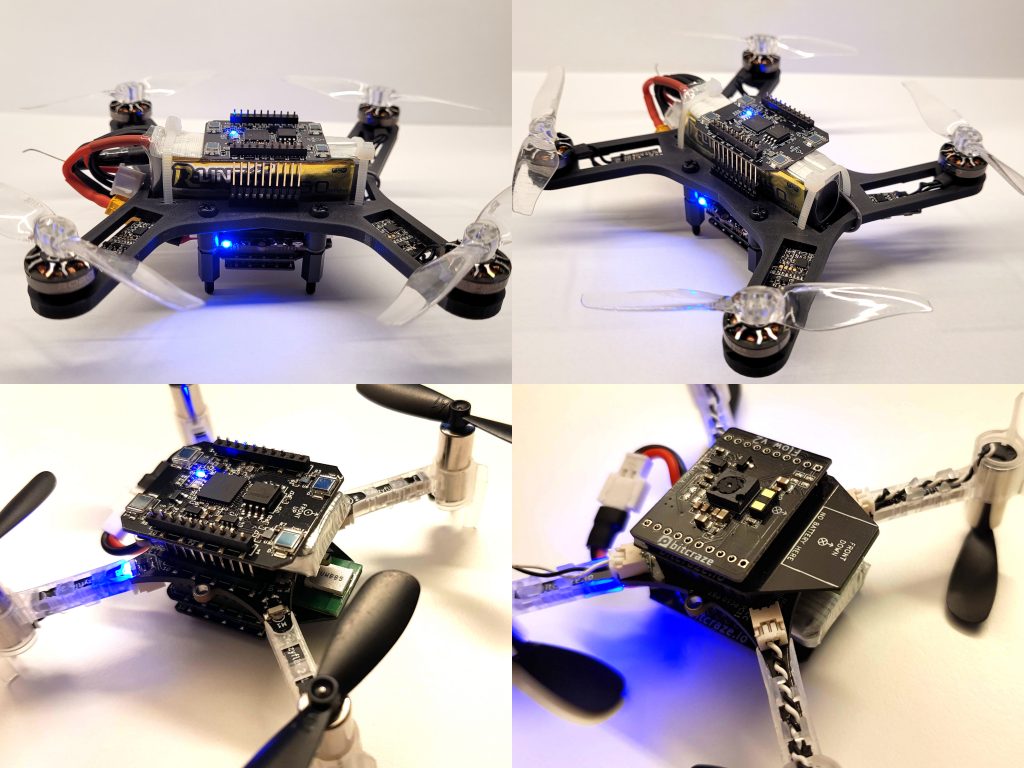

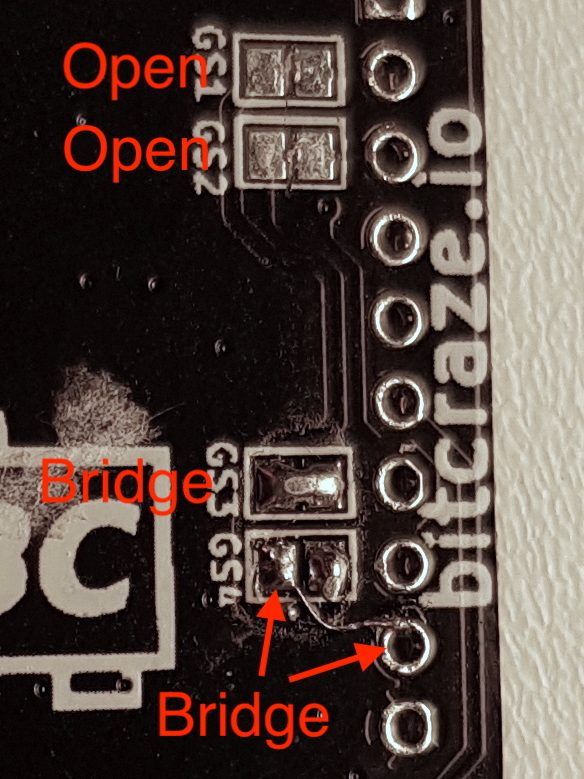

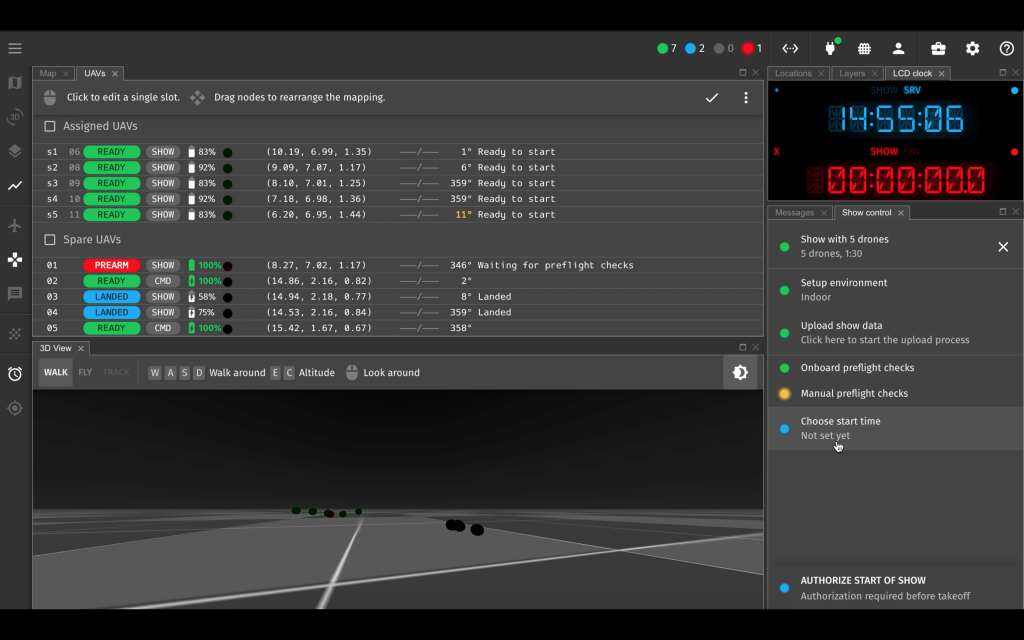

Last year we have started several collaborations with show drone orientated business, which we are definitely moving forward with in 2021. For shows stability and performance is very important so with the feedback of those that work with that on a regular basis will be crucial for the further development and reliability of our products.

Moreover we would like to continue our close collaboration with researchers at institutes and universities, to help them out with achieving their goals and contribute their work to our opensource firmware and software. Here we want to encourage the community to make their contributions easy to use by others, therefore increasing the reproducibility of the implementations, which is a crucial aspect of research. Also we are planning to have more of our online tutorial like the one we had in November.

We also will be working with closely together with one of our very active community members, Wolfgang Hönig! He has done a lot of great work for the Crazyswarm project from his time at University of Southern California (USC) and has spend the last few years at Caltech. He will be working together with us for a couple of months in the spring so we will be very happy to have him. Moreover, we will also have 2 master students from LTH working with us on the topic hardware simulation in the spring. We are making sure that we can all work together in the current situation, either sparsely at the office or fully online.

In 2021, we will also keep our eyes open for new potential Bitcrazers! We believe that everybody can add her/his own unique addition to the team and therefore it is important for us to keep growing and get new/fresh ideas or approaches to our problem. Usually we would meet new people at conferences but we will try new virtual ways to get to know our community and hopefully will meet somebody that can enhance our crazy group.

Working from home

Due to the pandemic we are currently mainly working from home and from the looks of it, this will continue a while. Even though we think we have managed to find a way to work remotely that is fairly efficient, it is still not at the same level as meeting in real-life, so there is always room for improvement. Further more the lack of access to electronics lab, flight lab and other facilities when working from home, does not speed work up. We will try to do our best though under the current circumstances and are looking forward to an awesome 2021!