This week we have a guest blog post from Jiawei Xu and David Saldaña from the Swarmslab at Lehigh University. Enjoy!

Limits of flying vehicles

Advancements in technology have made quadrotor drones more accessible and easy to integrate into a wide variety of applications. Compared to traditional fixed-wing aircraft, quadrotors are more flexible to design and more suitable for motioning, such as statically hovering. Some examples of quadrotor applications include photographers using mounting cameras to take bird’s eye view images, and delivery companies using them to deliver packages. However, while being more versatile than other aerial platforms, quadrotors are still limited in their capability due to many factors.

First, quadrotors are limited by their lift capacity, i.e., strength. For example, a Crazyflie 2.1 is able to fly and carry a light payload such as an AI deck, but it is unable to carry a GoPro camera. A lifter quadrotor that is equipped with more powerful components can transport heavier payload but also consumes more energy and requires additional free space to operate. The difference in the strength of individual quadrotors creates a dilemma in choosing which drone components are better suited for a task.

Second, a traditional quadrotor’s motion in translation is coupled with its roll and pitch. Let’s take a closer look at Crazyflie 2.1, which utilizes a traditional quadrotor design. Its four motors are oriented in the same direction – along the positive z-axis of the drone frame, which makes it impossible to move horizontally without tilting. While such control policies that convert the desired motion direction into tilting angles are well studied, proven to work, and implemented on a variety of platforms [1][2], if, for instance, we want to stack a glass filled with milk on top of a quadrotor and send it from the kitchen to the bedroom, we should still expect milk stains on the floor. This lack of independent control for rotation and translation is another primary reason why multi-rotor drones lack versatility.

Improving strength

These versatility problems are caused by the hardware of a multi-rotor drone designed specifically to deal with a certain set of tasks. If we push the boundary of these preset tasks, the requirements on the strength and controllability of the multi-rotor drone will eventually be impossible to satisfy. However, there is one inspiration we take from nature to improve the versatility in the strength of multi-rotor drones – modularity! Ants are weak individual insects that are not versatile enough to deal with complex tasks. However, when a group of ants needs to cross natural boundaries, they will swarm together to build capable structures like bridges and boats. In our previous work, ModQuad [3], we created modules that can fly by themselves and lift light payloads. As more ModQuad modules assemble together into larger structures, they can provide an increasing amount of lift force. The system shows that we can combine weak modules with improving the versatility of the structure’s carrying weight. To carry a small payload like a pin-hole camera, a single module is able to accomplish the task. If we want to lift a heavier object, we only need to assemble multiple modules together up to the required lift.

Improving controllability

On a traditional quadrotor, each propeller is oriented vertically. This means the device is unable to generate force in the horizontal direction. By attaching modules side by side in a ModQuad structure, we are aligning more rotors in parallel, which still does not contribute to the horizontal force the structure can generate. That is how we came up with the idea of H-ModQuad — we would like to have a versatile multi-rotor drone that is able to move in an arbitrary direction at an arbitrary attitude. By tilting the rotors of quadrotor modules and docking different types of modules together, we obtain a structure whose rotors are not pointing in the same direction, some of which are able to generate a force along the horizontal direction.

H-ModQuad Design

H-ModQuad has two major characteristics: modularity and heterogeneity, which can be indicated by the “Mod” and “H-” in the name. Modularity means that the vehicle (we call a structure) is composed of multiple smaller modules which are able to fly by themselves. Heterogeneity means that we can have modules of different types in a structure.

As mentioned before, insects like ants utilize modularity to enhance the group’s versatility. Aside from a large number of individuals in a swarm that can adapt to the different scales of the task requirement, the individuals in a colony specializing in different tasks are of different types, such as the queen, the female workers, and the males. The differentiation of the types in a hive helps the group adapt to tasks of different physical properties. We take this inspiration to develop two types of modules.

In our related papers [4][5], we introduced two types of modules which are R-modules and T-modules.

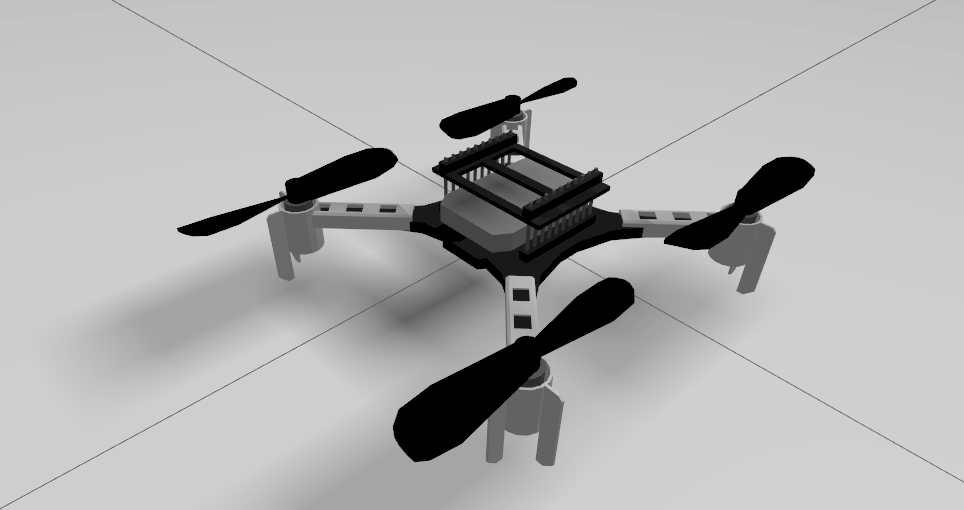

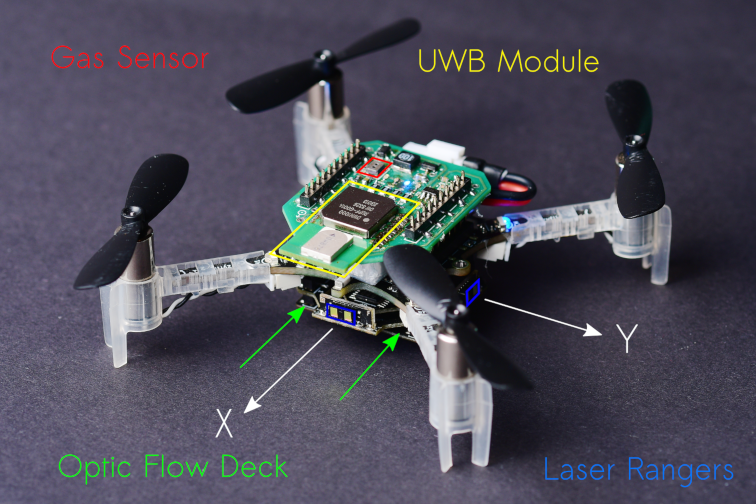

An example T-module is shown in the figure above. As shown in the image, the rotors in a T-module are tilted around its arm connected with the central board. Each pair of diagonal rotors are tilted in the opposite direction, and each pair of adjacent rotors are either tilting in the same direction or in the opposite direction. We arrange the tilting of the rotors so that all the propellers generate the same thrust force, making the structure torque-balanced. The advantage of the T-module is that it allows the generation of more torque around the vertical axis. One single module can also generate forces in all horizontal directions.

An R-module has all its propellers oriented in the same direction that is not on the z-axis of the module. In this configuration, when assembling multiple modules together, rotors from different modules will point in different directions in the overall structure. The picture below shows a fully-actuated structure composed of R-modules. The advantage of R-modules is that the rotor thrusts inside a module are all in the same direction, which is more efficient when hovering.

Depending on what types of modules we choose and how we arrange those modules, the assembled structure can obtain different actuation capabilities. Structure 1 is composed of four R-modules, which is able to translate in horizontal directions efficiently without tilting. The picture in the intro shows a structure composed of four T-modules of two types. It can hover while maintaining a tilting angle of up to 40 degrees.

Control and implementation

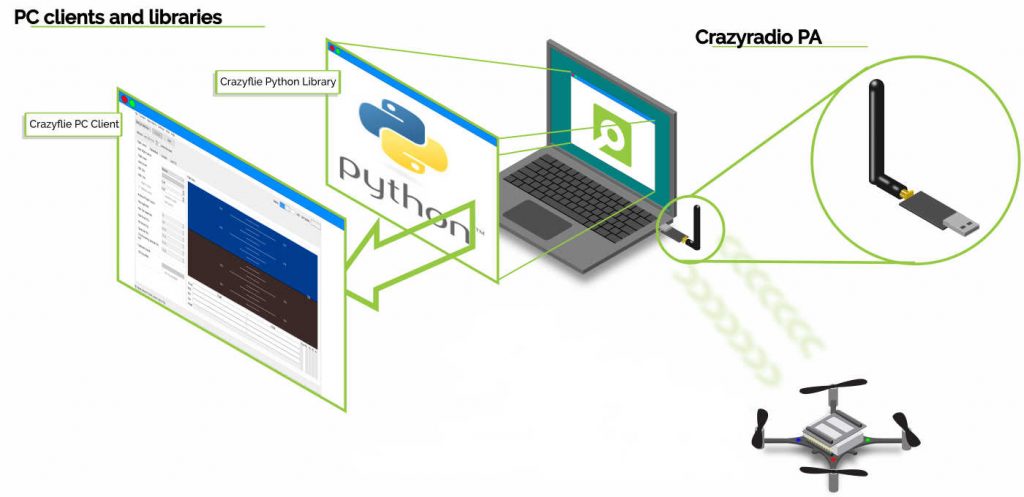

We implemented our new geometric controller for H-ModQuad structures based on Crazyflie Firmware on Crazyflie Bolt control boards. Specifically, aside from tuning the PID parameters, we have to change the power_distribution.c and controller_mellinger.c so that the code conforms to the structure model. In addition, we create a new module that embeds the desired states along predefined trajectories in the firmware. When we send a timestamp to a selected trajectory, the module retrieves and then sends the full desired state to the Mellinger Controller to process. All modifications we make on the firmware so that the drone works the way we want can be found at our github repository. We also recommend using the modified crazyflie_ros to establish communication between the base station and the drone.

Videos

Challenges and Conclusion

Different from the original Crazyflie 2.x, Bolt allows the usage of brushless motors, which are much more powerful. We had to design a frame using carbon fiber rods and 3-D printed connecting parts so that the chassis is sturdy enough to hold the control board, the ESC, and the motors. It takes some time to find the sweet spot of the combination of the motor model, propeller size, batteries, and so on. Communicating with four modules at the same time is also causing some problems for us. The now-archived ROS library, crazyflie_ros, sometimes loses random packages when working with multiple Crazyflie drones, leading to the stuttering behavior of the structure in flight. That is one of the reasons why we decided to migrate our code base to the new Crazyswarm library instead. The success of our design, implementation, and experiments with the H-ModQuads is proof of work that we are indeed able to use modularity to improve the versatility of multi-rotor flying vehicles. For the next step, we are planning to integrate tool modules into the H-ModQuads to show how we can further increase the versatility of the drones such that they can deal with real-world tasks.

Reference

[1] D. Mellinger and V. Kumar, “Minimum snap trajectory generation and control for quadrotors,” in 2011 IEEE International Conference on Robotics and Automation, 2011, pp. 2520–2525.

[2] T. Lee, M. Leok, and N. H. McClamroch, “Geometric tracking control of a quadrotor uav on se(3),” in 49th IEEE Conference on Decision and Control (CDC), 2010, pp. 5420–5425.

[3] D. Saldaña, B. Gabrich, G. Li, M. Yim and V. Kumar, “ModQuad: The Flying Modular Structure that Self-Assembles in Midair,” 2018 IEEE International Conference on Robotics and Automation (ICRA), 2018, pp. 691-698, doi: 10.1109/ICRA.2018.8461014.

[4] J. Xu, D. S. D’Antonio, and D. Saldaña, “Modular multi-rotors: From quadrotors to fully-actuated aerial vehicles,” arXiv preprint arXiv:2202.00788, 2022.

[5] J. Xu, D. S. D’Antonio and D. Saldaña, “H-ModQuad: Modular Multi-Rotors with 4, 5, and 6 Controllable DOF,” 2021 IEEE International Conference on Robotics and Automation (ICRA), 2021, pp. 190-196, doi: 10.1109/ICRA48506.2021.9561016.