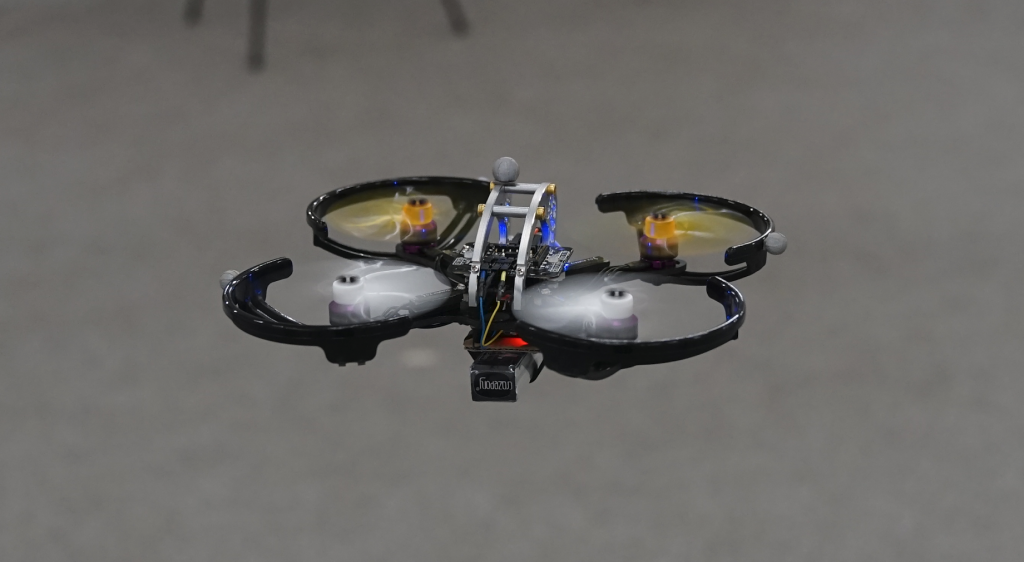

In this blog post we will describe one of the demos we were running at IROS and how it was implemented. Conceptually this demo is based on the same ideas as for ICRA 2017 but the implementation is completely new and much cleaner.

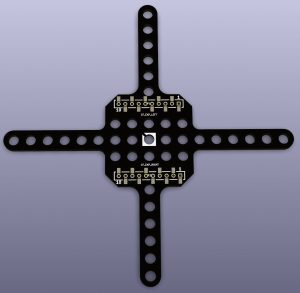

The demo is fully autonomous (no computer in the loop) but it requires an external positioning system. We flew it using either the Loco Positioning System or the prototype Lighthouse system.

A button has been added to the LPS deck to start the demo. When the button is pressed the Crazyflie waits for position lock, takes off and repeats a predefined spiral trajectory until the battery is out, when it goes back to the door of the cage and lands.

For some reason we forgot to shoot a video at IROS so a reproduced version from the (messy) office will have to do instead, imagine a 2×2 m net cage around the Crayzflie.

Implementation

As mentioned in an earlier blog post the demo uses the high level commander originally developed by Wolfgang Hoenig and James Alan Preiss for Crazyswarm. We prototyped everything in python (sending commands to the Crazyflie via Crazyradio) to quickly get started and design the demo . Designing trajectories for the high level commander is not trivial and it took some time to get it right. What we wanted was a spiral downwards motion and then going back up along the Z-axis in the centre of the spiral. The high level commander is a bit picky on discontinuities and we used sines for height and radius to generate a smooth trajectory.

Trajectories in the high level commander are defined as a number of pieces, each describing x, y, z and yaw for a short part of the full trajectory. When flying the trajectories the pieces are traversed one after the other. Each piece is described by 4 polynomials with 8 terms, one polynomial per x, y, z and yaw. The tricky part is to find the polynomials and we decided to do it by cutting our trajectory up in segments (4 per revolution), generate coordinates for a number of points along the segment and finally use numpy.polyfit() to fit polynomials to the points.

When we were happy with the trajectory it was time to move it to the Crazyflie. Everything is implemented in the app.c file and is essentially a timer loop with a state machine issuing the same commands that we did from python (such as take off, goto and start trajectory). A number of functions in the firmware had to be exposed globally for this to work, maybe not correct from an architectural point of view but one has to do what one has to do to get the demo running :-) The full source code is available at github. Note that the make file is hardcoded for the Crazyflie 2.1, if you want to play with the code on a CF 2.0 you have to update the sensor setting

This approach led to an idea of a possible future app API (for apps running in the Crazyflie) containing similar functionality as the python lib. This would make it easy to prototype an app in python and then port it to firmware.

Controllers

The standard PID controller is very forgiving and usually handles noise and outliers from the positioning system in a fairly good way. We used it with the LPS system since there is some noise in the estimated position in an Ultra Wide Band system. The Lighthouse system on the other hand is much more precise so we switched to the Mellinger controller instead when using it. The Mellinger controller is more agile but also more sensitive to position errors and tend to flip when something unexpected happens. It is possible to use the Mellinger with the LPS as well but the probability of a crash was higher and we prioritised a carefree demo over agility. An extra bonus with the Mellinger controller is that it also handles yaw (as opposed to the PID controller) and we added this when flying with the Lighthouse.

Going faster

Since the precision in the Lighthouse positioning system is so much better we increased the speed to add some extra excitement. It turned out to be so good that it repeatedly almost touched the panels at the back without any problems, over and over again!

One of the reasons we designed the trajectory the way we did was actually to make it possible to fly multiple copters at the same time, the trajectories never cross. As long as the Crazyflies are not hit by downwash from a copter too close above all is good. Since the demo is fully autonomous and the copters have no knowledge about each other we simply started them with appropriate intervals to separate them in space. We managed to fly three Crazyflies simultaneously with a fairly high degree of stability this way.