Today, we’re excited to share research from Vrije Universiteit Amsterdam, ‘From Shadows to Light,’ which presents an innovative swarm robotics approach where nano-drones autonomously track dynamic sources indoors.

Motivation

In dynamic and unpredictable indoor environments, locating moving sources such as heat, gas, or light is challenging. GPS-denied settings in particular demand efficient onboard solutions for both control and sensing. Our research demonstrates how small drones such as Crazyflies can be organized into a coordinated swarm that autonomously locates and follows these sources indoors, relying solely on onboard sensing and communication. Each drone adapts its behavior in response to its own sensor readings without sharing individual measurements, and these modified interactions with nearby agents let the swarm collectively converge on the center of a light source.

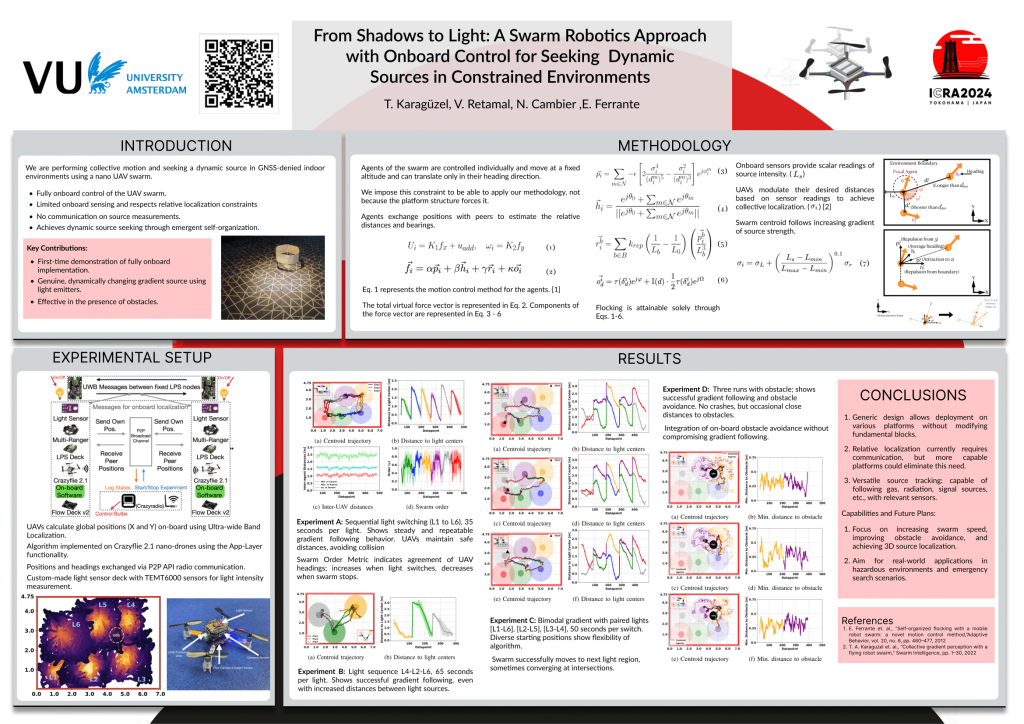

Method

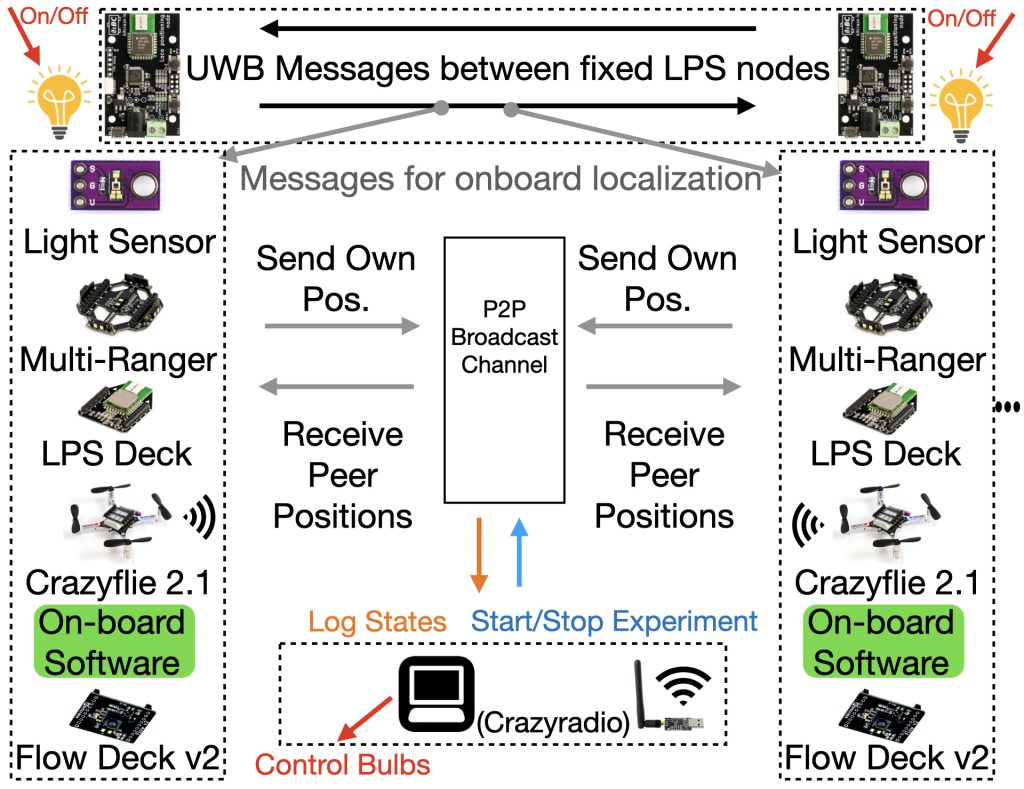

Our approach enables each Crazyflie to function autonomously, using onboard sensing combined with continuous inter-agent communication at 20 Hz. The method is structured around three core components:

Proximal Control and Collective Motion

Each drone broadcasts its position to nearby agents, enabling the calculation of relative positions to maintain safe distances. This proximal control ensures cohesive group movement by computing virtual force vectors for velocity commands, which are sent to onboard controllers operating at 20 Hz.
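To make the idea of virtual force vectors concrete, here is a minimal sketch of how a velocity command could be derived from neighbor positions. The spring-like force law, the function names, and every parameter value (`desired_dist`, `gain`, `max_range`, `max_speed`) are illustrative assumptions, not the force law or tuning used in the paper.

```python
import math


def proximal_force(own_pos, neighbor_positions, desired_dist=1.0, gain=1.0, max_range=3.0):
    """Sum a spring-like virtual force over all neighbors within max_range.

    Assumed force law (hypothetical): a neighbor farther than desired_dist
    attracts, a neighbor closer than desired_dist repels.
    """
    fx, fy = 0.0, 0.0
    for nx, ny in neighbor_positions:
        dx, dy = nx - own_pos[0], ny - own_pos[1]
        dist = math.hypot(dx, dy)
        if dist == 0.0 or dist > max_range:
            continue  # ignore coincident or out-of-range neighbors
        magnitude = gain * (dist - desired_dist)  # positive pulls toward the neighbor
        fx += magnitude * dx / dist
        fy += magnitude * dy / dist
    return fx, fy


def velocity_command(force, max_speed=0.5):
    """Use the force as a velocity setpoint, saturated at max_speed (m/s)."""
    speed = math.hypot(force[0], force[1])
    if speed <= max_speed:
        return force
    scale = max_speed / speed
    return force[0] * scale, force[1] * scale
```

In a loop running at the communication rate, each drone would recompute `proximal_force` from the latest broadcast positions and pass the saturated result to its onboard velocity controller.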

Source Seeking Through Adaptive Social Proximity

Drones use custom light sensors to detect local light intensity. Instead of directly adjusting positions based on this measurement, each drone modifies its social proximity to neighbors according to the sensed intensity without broadcasting this information. This adaptation allows the swarm to collectively follow the light gradient toward the source in a decentralized manner.
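As a rough illustration of adapting social proximity, the sketch below maps a locally sensed, normalized light intensity to a preferred neighbor distance. The linear mapping, the endpoint values `d_dark` and `d_bright`, and even the direction of the adaptation (closer or farther in brighter regions) are assumptions made for the example; the paper defines the actual relationship.

```python
def desired_distance(intensity, d_dark=1.2, d_bright=0.6):
    """Map a normalized light reading in [0, 1] to a preferred neighbor distance (m).

    Hypothetical linear interpolation between the distance preferred in the dark
    (d_dark) and the distance preferred at full brightness (d_bright).
    The reading is used locally only and is never broadcast.
    """
    level = min(1.0, max(0.0, intensity))  # clamp noisy readings
    return d_dark + level * (d_bright - d_dark)
```

The returned value would replace a fixed preferred distance in the proximal-control force law, so drones sensing different intensities interact asymmetrically with their neighbors even though no measurement is ever shared.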

Obstacle Avoidance

Equipped with time-of-flight sensors, each drone independently detects obstacles and adjusts its trajectory to maintain safety. This ensures the swarm remains intact while navigating toward the source.
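One simple way to turn range readings into an avoidance term is sketched below. It assumes four horizontal range readings in meters, a body frame with x pointing forward and y pointing left, and a linear repulsion inside a hypothetical `safe_dist`; none of these details are taken from the paper.

```python
def avoidance_vector(ranges, safe_dist=0.5, gain=1.0):
    """Return a body-frame (vx, vy) push away from obstacles closer than safe_dist.

    ranges: dict with optional keys 'front', 'back', 'left', 'right' (meters);
    a missing key or None means no obstacle was detected in that direction.
    """
    # Unit vectors of each sensing direction (assumed frame: x forward, y left).
    directions = {
        "front": (1.0, 0.0),
        "back": (-1.0, 0.0),
        "left": (0.0, 1.0),
        "right": (0.0, -1.0),
    }
    vx, vy = 0.0, 0.0
    for name, (ux, uy) in directions.items():
        distance = ranges.get(name)
        if distance is None or distance >= safe_dist:
            continue
        push = gain * (safe_dist - distance) / safe_dist  # 0 at safe_dist, gain at contact
        vx -= push * ux  # move opposite to the obstacle direction
        vy -= push * uy
    return vx, vy
```

The resulting vector could be added to the flocking velocity command, so a drone yields to an obstacle while the proximal forces keep it attached to the swarm.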

By combining continuous relative positioning, virtual force-based control, individual sensing, and adaptive social behavior, our methodology provides a robust framework for efficient source seeking in GPS-denied indoor environments.

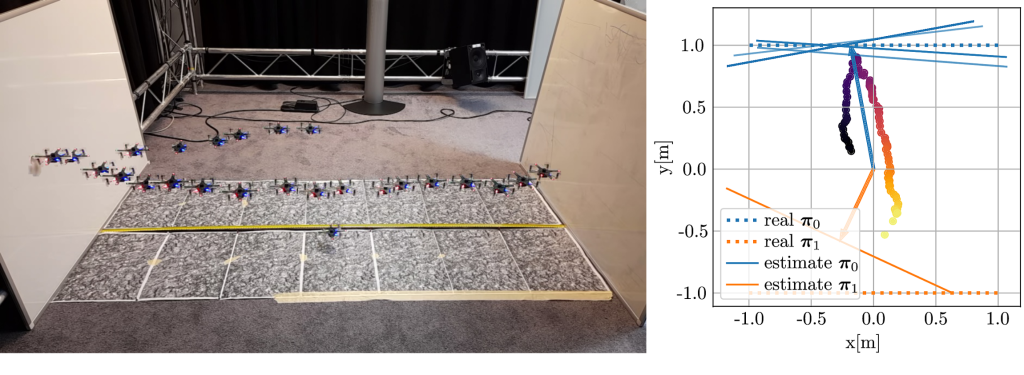

Experimental Setup

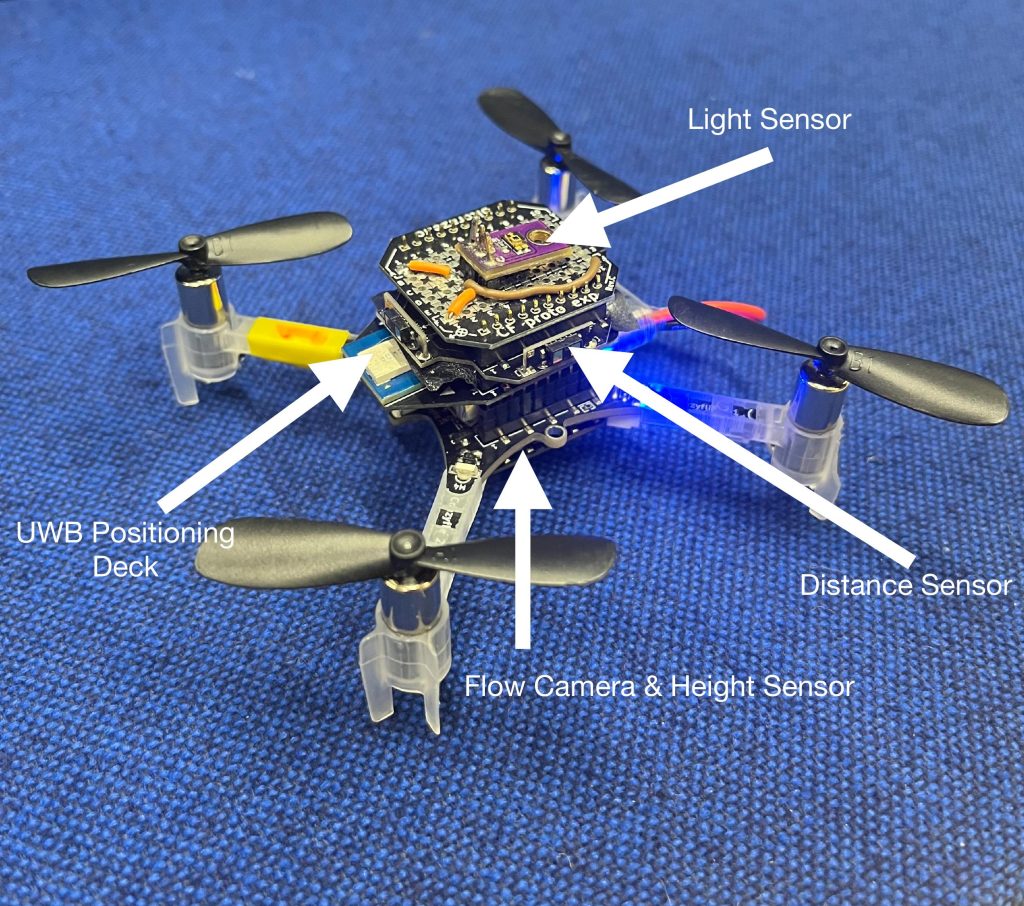

Crazyflie equipped with Flow Deck v2, UWB Deck, Multi-Ranger Deck, and a custom-made deck that produces an analog voltage reading from an LDR for light intensity measurements.

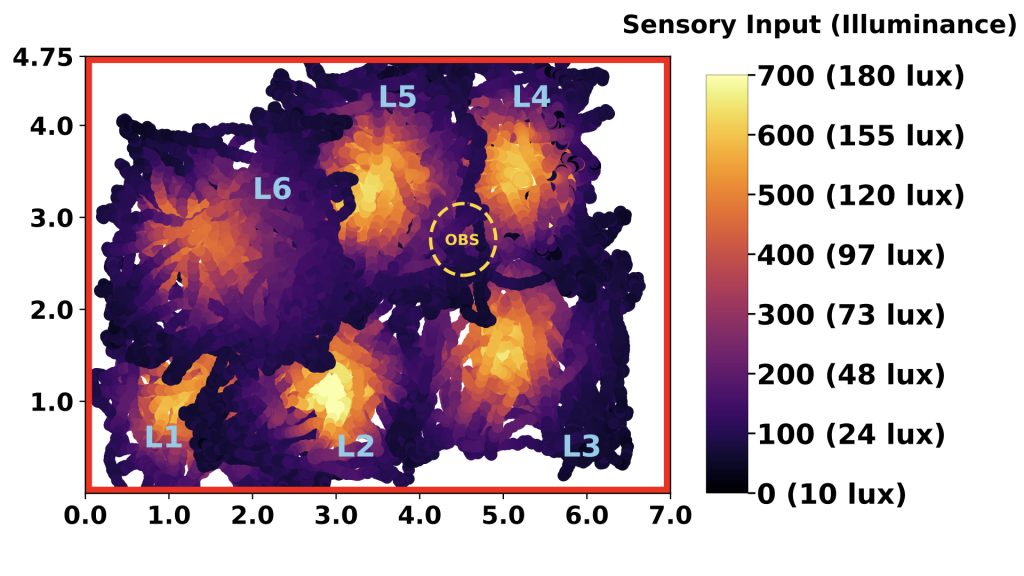

Our experiments take place in a 7 × 4.75 m indoor arena with remotely controlled overhead light bulbs. These bulbs, activated individually or in pairs, create a moving light gradient. We tested the flocking swarm by initially positioning it at the edge of an illuminated area. As the light source shifted, we assessed the swarm’s performance by comparing its trajectory with the known centers of the illuminated areas, without waiting for full convergence at each step. We also mapped the light intensity of the environment: a single Crazyflie moved randomly around the flight arena while recording measurements, which were later merged into a single light-intensity heatmap.

Brightness values across the test environment, measured for each light source while only that source was active.
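The mapping step can be pictured as binning logged samples into a grid and averaging each cell. The sketch below assumes samples of the form `(x, y, value)` with the origin at one arena corner and a hypothetical 0.25 m cell size; the actual logging format and map resolution are not stated in this post.

```python
import math


def build_heatmap(samples, width=7.0, height=4.75, cell=0.25):
    """Average light readings per grid cell; cells without samples stay None.

    samples: iterable of (x, y, value), with x in [0, width) and y in [0, height).
    Returns a list of rows, indexed as heatmap[row][col].
    """
    cols = math.ceil(width / cell)
    rows = math.ceil(height / cell)
    sums = [[0.0] * cols for _ in range(rows)]
    counts = [[0] * cols for _ in range(rows)]
    for x, y, value in samples:
        if not (0.0 <= x < width and 0.0 <= y < height):
            continue  # drop samples logged outside the arena
        col, row = int(x / cell), int(y / cell)
        sums[row][col] += value
        counts[row][col] += 1
    return [
        [sums[r][c] / counts[r][c] if counts[r][c] else None for c in range(cols)]
        for r in range(rows)
    ]
```

Building one such map per light source, with only that source switched on, gives a reference against which swarm trajectories can later be compared.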

Results

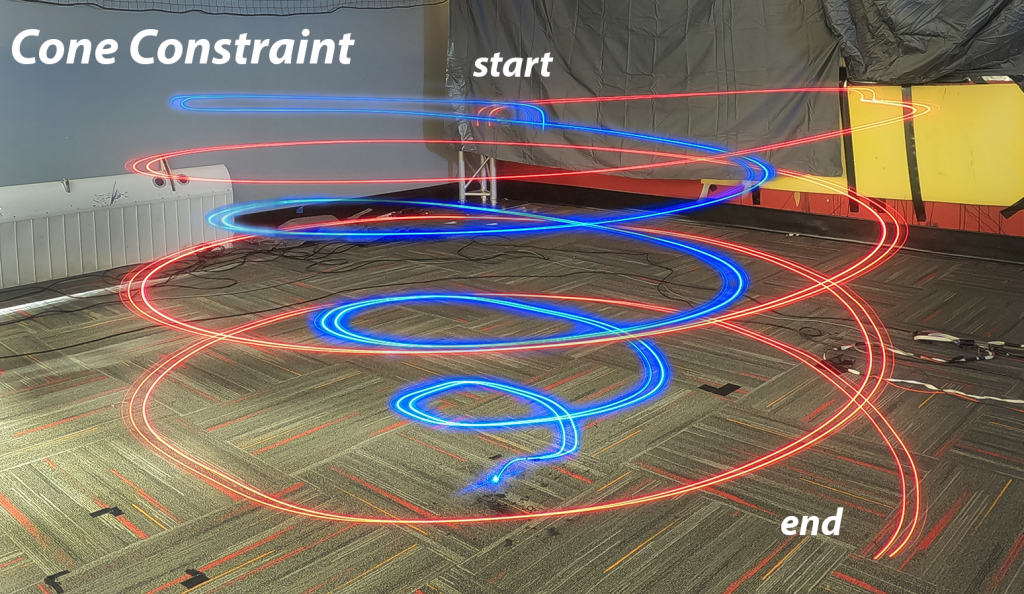

The flock flies as an ordered swarm, successfully localizing around the source with the swarm’s centroid positioned at the source center. (The centroid appears as a point without an arrow in the video.)
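The two quantities mentioned here, the swarm centroid and the degree of order, can be computed as in the sketch below. The order measure shown is the common polarization metric (the norm of the mean heading vector); whether the paper uses exactly this definition is an assumption, and the function names are illustrative.

```python
import math


def centroid(positions):
    """Mean (x, y) position of the swarm."""
    n = len(positions)
    return (sum(p[0] for p in positions) / n, sum(p[1] for p in positions) / n)


def centroid_error(positions, source_center):
    """Distance between the swarm centroid and a known source center (m)."""
    cx, cy = centroid(positions)
    return math.hypot(cx - source_center[0], cy - source_center[1])


def order(headings):
    """Polarization of the swarm: 1.0 when all headings (radians) agree, near 0 when they cancel."""
    n = len(headings)
    mean_cos = sum(math.cos(h) for h in headings) / n
    mean_sin = sum(math.sin(h) for h in headings) / n
    return math.hypot(mean_cos, mean_sin)
```

Evaluating `centroid_error` at each logged time step against the center of the currently active light gives a simple tracking curve, and `order` indicates whether the flock kept moving as an aligned group while doing so.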

Even with an obstacle present within or between the illuminated regions, the flock successfully localizes around the center, avoiding the obstacle and maintaining order and cohesion within the swarm. The Multi-Ranger deck provides distance measurements for obstacle detection.

Future Directions

As the next step, we plan to apply our highly generalizable algorithm to various source types, including gas sources, radio signals, and similar sources that provide only scalar strength measurements rather than directional cues. Additionally, we have demonstrated that our flocking and source localization algorithms work effectively in 3D. We aim to showcase a fully functional application with a 3D-localized source and a flocking swarm operating in 3D space. Finally, we are working toward achieving fully onboard relative localization, which would eliminate the need for any indoor positioning system. This advancement would allow our swarm to operate autonomously in any environment, replicating the same behavior wherever it is deployed.

Links

- Paper: https://doi.org/10.1109/LRA.2023.3331897

- Videos: https://youtube.com/playlist?list=PLQXaE6NbHSe39z1X2zpjZD6f6mB9f40u9&si=A--U9C0OCmDN8Mz3

- GitHub Project: https://github.com/tugayalperen/Gradient_following_firmware

- 3D Flocking Paper: https://doi.org/10.1007/978-3-031-70932-6_12

- 3D Flocking Videos: https://youtube.com/playlist?list=PLQXaE6NbHSe0_JAZre9jx-lerFHW06Tu4&si=vnE0KfkWFXFd3b-f

The authors were with the Vrije Universiteit Amsterdam.

Please feel free to contact us with any questions or ideas: t.a.karaguzel@vu.nl

Please cite this as:

@ARTICLE{10314746,
  author={Karagüzel, Tugay Alperen and Retamal, Victor and Cambier, Nicolas and Ferrante, Eliseo},
  journal={IEEE Robotics and Automation Letters},
  title={From Shadows to Light: A Swarm Robotics Approach With Onboard Control for Seeking Dynamic Sources in Constrained Environments},
  year={2024},
  volume={9},
  number={1},
  pages={127-134},
  keywords={Robot sensing systems;Autonomous aerial vehicles;Position measurement;Vehicle dynamics;Sensors;Location awareness;Drones;Swarm robotics;aerial systems: perception and autonomy;multi-robot systems},
  doi={10.1109/LRA.2023.3331897}}